Snowflake and Databricks are competitors and similar in many ways. Snowflake, however, was built primarily around a proprietary storage format on infrastructure managed by Snowflake. It offers robust developer tools and flexible compute that is separate from storage. Recently Snowflake has expanded to embrace the Lakehouse architecture by offering native support for Iceberg tables and open sourcing its Polaris catalog. Snowflake also offers a notebook interface and the ability to run Spark or Python, though its primary strength is SQL. Snowflake offers a more focused set of features that are easier to use, and thus has a lower barrier to entry than Databricks. To put it simply, Snowflake is historically a data warehouse that is easy to use and is now investing in Lakehouse offerings, whereas Databricks has always been a Lakehouse offering and is now investing in ease of use.

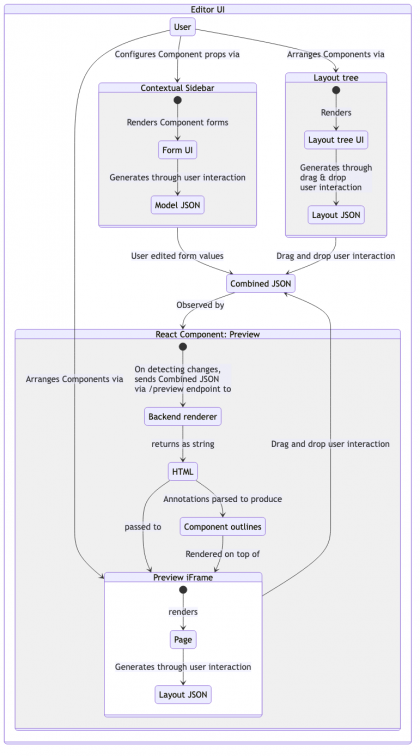

Lakehouse architecture provides a streamlined way to analyze both structured and unstructured data, making use of open table formats to simplify analysis across platforms. Open table formats are designed to enhance the interoperability, flexibility, and efficiency of data processing systems by using predictable storage formats that are not specific to the technologies that access them. Different formats are specialized for different types of processing operations. Leading options include Delta Lake and Iceberg, alongside the Parquet file format on which both commonly build.
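To make the idea of an engine-independent storage format concrete, here is a toy sketch in Python. It assumes a table is nothing more than a directory with a numbered JSON commit log that lists data files; the `_log` directory name and the `commit` and `snapshot` functions are hypothetical and are not the layout or API of Delta Lake or Iceberg, which are considerably richer.

```python
import json
from pathlib import Path


def commit(table_dir: Path, new_files: list) -> int:
    """Append a numbered commit that records newly added data files.

    Toy version: real table formats also make this step atomic.
    """
    log_dir = table_dir / "_log"  # hypothetical log location
    log_dir.mkdir(parents=True, exist_ok=True)
    version = len(list(log_dir.glob("*.json")))
    (log_dir / f"{version:08d}.json").write_text(json.dumps({"add": new_files}))
    return version


def snapshot(table_dir: Path, as_of=None) -> list:
    """Replay commits (optionally up to version `as_of`) to list the table's data files."""
    files = []
    for path in sorted((table_dir / "_log").glob("*.json")):
        if as_of is not None and int(path.stem) > as_of:
            break
        files.extend(json.loads(path.read_text())["add"])
    return files
```

Because the table state lives in plain files with a predictable layout, any program that can read JSON can work out which data files belong to the table, and passing an earlier version to `as_of` returns the table as it looked at that commit.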

Lakehouse Architecture

Iceberg tables and Delta tables are both designed to handle large-scale data management with features like ACID transactions, schema evolution, and partitioning, but they have distinct differences. Iceberg, an Apache project, supports multiple file formats and offers flexible schema evolution and hidden partitioning, making it suitable for complex data operations and integration with various data processing engines like Apache Spark and Flink. Delta tables, open source but primarily developed by Databricks, emphasize schema enforcement and tight integration with the Databricks ecosystem, providing optimized performance and seamless cloud integration. Businesses must handle both Delta and Iceberg formats to effectively manage customer data and maximize its potential.
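Two of the features named above, hidden partitioning and schema enforcement, can be sketched in plain Python. This is an illustration of the concepts only: the classes `HiddenPartitionedTable` and `EnforcedTable` and the `day_transform` helper are hypothetical and do not correspond to the Iceberg or Delta Lake APIs.

```python
from datetime import datetime


def day_transform(ts: datetime) -> str:
    """Derive a partition value from a timestamp (a day-granularity transform)."""
    return ts.strftime("%Y-%m-%d")


class HiddenPartitionedTable:
    """Groups rows by a value derived from `event_time`; callers never name the partition."""

    def __init__(self):
        self.partitions = {}  # partition value -> list of rows

    def append(self, row: dict) -> None:
        self.partitions.setdefault(day_transform(row["event_time"]), []).append(row)

    def scan(self, start: datetime, end: datetime):
        """Filter on the source column; partitions outside the range are never read."""
        lo, hi = day_transform(start), day_transform(end)
        scanned = [key for key in self.partitions if lo <= key <= hi]
        rows = [
            row
            for key in scanned
            for row in self.partitions[key]
            if start <= row["event_time"] <= end
        ]
        return rows, len(scanned)


class EnforcedTable:
    """Rejects writes that do not match the declared schema; changes must be explicit."""

    def __init__(self, schema: dict):
        self.schema = dict(schema)  # column name -> Python type
        self.rows = []

    def append(self, row: dict) -> None:
        if set(row) != set(self.schema):
            raise ValueError(f"columns {sorted(row)} do not match schema {sorted(self.schema)}")
        for column, expected in self.schema.items():
            if not isinstance(row[column], expected):
                raise TypeError(f"column {column!r} expects {expected.__name__}")
        self.rows.append(row)

    def add_column(self, name: str, column_type: type, default=None) -> None:
        """Explicit schema evolution: existing rows are backfilled with `default`."""
        self.schema[name] = column_type
        for row in self.rows:
            row[name] = default
```

In the first class a query filters only on `event_time`, and the table decides which partitions to skip. In the second, a write with an unexpected column or type fails until the schema is changed deliberately with `add_column`.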

Open Table Formats

In recent years, the explosive growth of businesses seeking to harness the power of big data has spurred the development of various platforms to store, manage, and analyze data in the cloud. These platforms offer a suite of tools useful for data engineering and real-time analytics, such as notebook interfaces, managed and auto-scaling compute functionality, security governance, and related functions. Major cloud data platforms, such as Databricks, Snowflake, Fabric, and BigLake, have emerged as frontrunners, each offering unique features and capabilities to meet the data needs of different firms, teams, or stakeholders.

Lakehouse Customer Data Platform

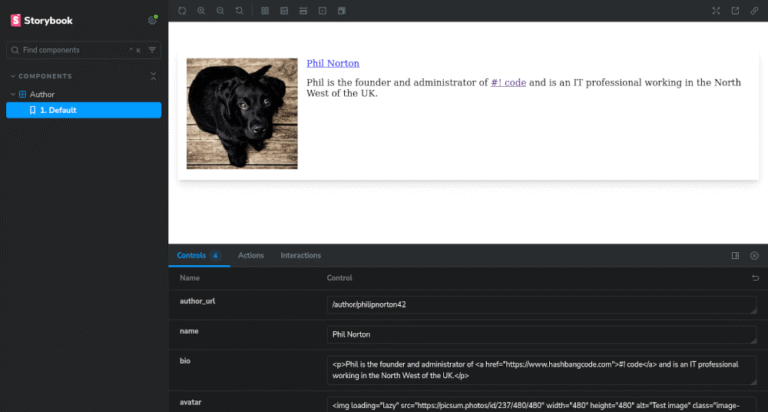

Databricks offers the broadest array of features, including robust notebook functionality, flexible compute, AI toolkits, and a massive network of partner applications. The platform is arguably the originator of the Lakehouse concept and is built from the ground up on the open source Delta Lake library for storage, offering support for Delta Tables and Delta Sharing. Databricks also supports other open storage formats, including Iceberg. Data access is based primarily on Apache Spark under the hood. This makes the platform flexible and useful for analysts, data scientists, and operational use cases, but it can be difficult for non-technical or low-code end users to adopt, which means bigger investments in training and in hiring skilled staff.

Comparing Iceberg Tables and Delta Tables

BigLake

Comparing Major Cloud Data Platforms in Practical Terms

A key pain point across the industry has been “silos”, and the core reason those silos exist is the practice of maintaining many copies of data in proprietary formats. As the industry embraces cross-platform access and open standards, moving away from vendor lock-in towards Lakehouse-driven interoperability is key for brands. A unified platform that coordinates the use of multiple platforms will be crucial for businesses hoping to be at the forefront of customer data management.

As an extension of BigQuery, BigLake defaults to storing data in a proprietary format, but it allows direct use of tables stored in other locations, which may be in Delta, Iceberg, or other open formats. Put simply, BigLake is Google expanding BigQuery to offer Lakehouse features and better support for open source formats.

Databricks

Choosing the right cloud data platform is crucial for businesses looking to maximize their data potential. Companies need to have a clear and thorough understanding of their own data needs – the volume, variety, scalability and performance requirements – along with the strengths and capabilities of each platform.

Fabric

BigLake is Google’s offering, and in essence it is their BigQuery service adapted for the Lakehouse. BigLake features scalable storage with a focus on governance and the use of standard table formats, all of which are Lakehouse features, and it facilitates interoperability and access control more generally. Unlike the other platforms, it makes use of both open and closed source formats, doing so to provide full BigQuery functionality, some of which is closed source.

The choice of a cloud data platform is a critical decision that can significantly impact an organization’s ability to leverage data effectively. Each platform offers unique strengths and capabilities, catering to different needs and preferences. By understanding the key features of these platforms, recognizing the importance of Lakehouse architecture, and considering specific business requirements, organizations can make informed decisions that align with their data strategy and drive success in the data-driven era.

Snowflake

Before comparing these top data platforms, however, it’s important to recognize what they have in common: a commitment to the principles of Lakehouse architecture. Lakehouse architecture is a cutting-edge approach to data management that unifies diverse data types and workloads within a single platform without the need for copying data. It offers scalable storage and robust data processing capabilities, ensuring data reliability and consistency through advanced transactional support.

Cross-Platform Interoperability is Key

About Caleb Benningfield

Fabric is Microsoft’s offering, built to unify data across hybrid and multi-cloud environments, with a specialized focus on Azure services. The platform distinguishes itself by its ease of use and can be quickly adopted by end users untrained in data science, for whom Snowflake and Databricks are a poor fit. Most governance and access-related concerns are either handled automatically or presented in simplified, legible form, which can save users significant effort but means other tools are better suited for fine-tuning access control. Fabric does not champion a specific format, instead offering uniform support for Delta, Iceberg, and Parquet, along with most other table formats, though, like Databricks, it makes use of Apache Spark under the hood.

A Lakehouse Customer Data Platform (CDP) leverages the strengths of Lakehouse architecture to unify and streamline customer data management across various cloud data platforms and create a comprehensive, real-time view of customer data. A Lakehouse CDP works by combining the flexibility and scalability of cloud data platforms with advanced data processing and analytics capabilities. By leveraging open table formats like Delta and Iceberg, a Lakehouse CDP enables businesses to integrate and analyze customer data from multiple sources seamlessly without the need to maintain multiple copies. This approach not only enhances data governance and security but also facilitates real-time insights and personalized customer experiences.