The risk isn’t hypothetical. A search for restaurants with accessible entrances could surface in a hiring context. Health information shared for one purpose could quietly influence what financial products get recommended. None of this requires a bad actor or a data breach. It just requires a system doing exactly what it was designed to do: connect the dots.

Personal data has always been sensitive, but it used to be segmented. Your pharmacy didn’t talk to your employer. Your search history didn’t feed your insurance quotes. The contextual boundaries weren’t perfect, but they were real enough that information stayed roughly where you put it.

Some early efforts point in the right direction. Anthropic’s Claude separates memory into distinct project areas. OpenAI says information shared through ChatGPT Health is compartmentalized from general conversations. These are real steps, but they’re still coarse. A memory system that can distinguish between “user likes chocolate” and “user manages diabetes and avoids chocolate” and “user’s health information, subject to stricter access rules” is a fundamentally different thing from one that just groups chats by project name.

The “big data” era taught us what happens when those foundational choices get made poorly and then locked in by scale and inertia. We’re at an early enough stage with AI memory that a different outcome is still possible, but only if the people building these systems treat the problem as real rather than theoretical. The risks aren’t lurking somewhere in the future. They’re structural features of how most current systems work today.

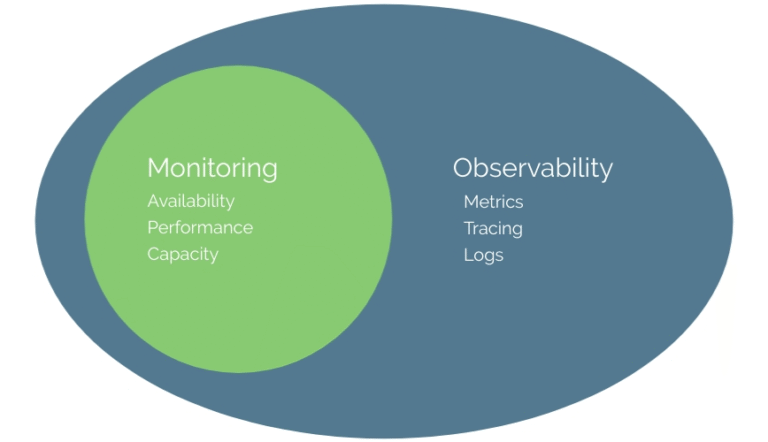

AI agents collapse that structure by design. When you interact with a single agent across many different parts of your life, the data about you stops living in separate buckets. It pools. A casual mention of dietary restrictions while building a grocery list sits next to a conversation about a chronic condition, which sits next to your salary expectations, your relationship troubles, your political concerns. Most current systems store all of it in a single unstructured repository, with no meaningful separation between categories, purposes, or the contexts in which each piece of information was shared.

When context collapses

Every AI developer building memory features is making choices that will be very hard to reverse. Whether to pool or segment data. Whether to make memory legible to users or let it accumulate silently. Whether to ship responsible defaults or optimize for the most seamless onboarding experience. These aren’t just technical decisions. They’re decisions about what kind of relationship these systems will have with the people who use them.

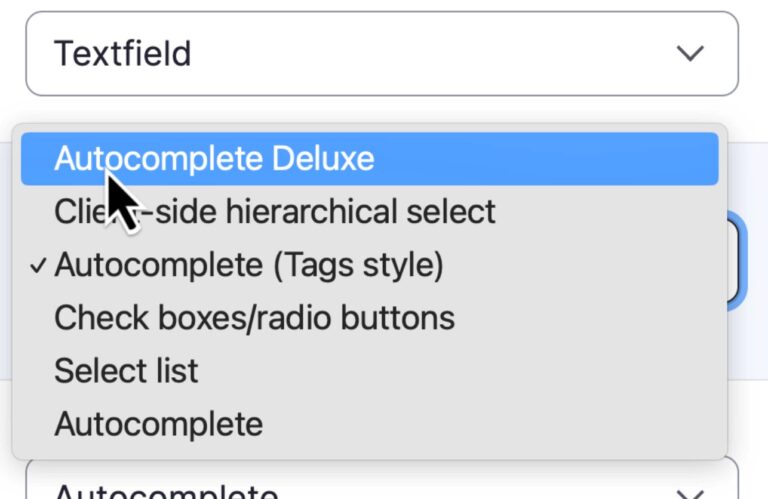

Users also need real controls, not the illusion of them. The static privacy settings and policy pages that defined the previous generation of tech platforms set a remarkably low bar. Natural language interfaces offer something better: the ability to explain what’s being remembered in plain terms and let users actually understand and manage it. But that only works if the underlying structure supports it. You can’t offer meaningful memory controls if the system can’t reliably distinguish one memory from another.

Your AI assistant knows your doctor, your salary, your boss’s email, and what you had for lunch. The question isn’t whether that’s useful. It’s who else gets to know and what happens when the system can’t tell the difference.

Last week you asked your AI agent to draft a message to your doctor. The week before, you used it to budget for the holidays. Yesterday it helped you prepare for a salary negotiation. To you, these were separate conversations about separate parts of your life. To the system, they were all just more data about the same person.

The Grok problem

That’s the problem nobody is quite ready to talk about honestly.

What’s needed is structure with teeth. Memory systems need to track where a piece of information came from, when it was created, and in what context. They need to use that provenance to restrict how and when memories can be accessed. A health detail shared in one context shouldn’t be retrievable by a salary negotiation workflow just because they share the same agent. That’s not a niche privacy concern. It’s basic data hygiene that the industry has been slow to take seriously because personalization sells and friction doesn’t.
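To make that concrete, here is a minimal sketch of provenance-tagged memory with context-scoped retrieval. It is an illustration under stated assumptions, not a description of how any vendor's system works: the `Context` categories, the `ALLOWED_CONTEXTS` policy table, and the `MemoryStore` class are all hypothetical names invented for this example.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum


class Context(Enum):
    # Hypothetical categories; a real system would need a richer taxonomy.
    HEALTH = "health"
    FINANCE = "finance"
    CAREER = "career"
    GENERAL = "general"


@dataclass(frozen=True)
class Memory:
    text: str
    source: str           # where the information came from
    created_at: datetime  # when it was recorded
    context: Context      # the context it was shared in


# Assumed policy: which stored contexts each requesting workflow may read.
ALLOWED_CONTEXTS = {
    Context.HEALTH: {Context.HEALTH, Context.GENERAL},
    Context.FINANCE: {Context.FINANCE, Context.GENERAL},
    Context.CAREER: {Context.CAREER, Context.GENERAL},
    Context.GENERAL: {Context.GENERAL},
}


class MemoryStore:
    def __init__(self):
        self._memories = []

    def remember(self, text, source, context):
        # Provenance is attached at write time, not inferred later.
        memory = Memory(text, source, datetime.now(timezone.utc), context)
        self._memories.append(memory)
        return memory

    def retrieve(self, requesting_context):
        # Only return memories whose context the requester is allowed to read.
        allowed = ALLOWED_CONTEXTS[requesting_context]
        return [m for m in self._memories if m.context in allowed]


store = MemoryStore()
store.remember("Manages diabetes", "message to doctor", Context.HEALTH)
store.remember("Target salary is confidential", "negotiation prep", Context.CAREER)
store.remember("Likes chocolate", "grocery list", Context.GENERAL)

# A salary negotiation workflow sees career and general memories only.
career_view = [m.text for m in store.retrieve(Context.CAREER)]
print(career_view)
```

The point of the sketch is the shape, not the specifics: because every memory carries its source, timestamp, and context, the access decision becomes an explicit, auditable policy lookup rather than whatever a single pooled store happens to surface.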

What needs to change

This isn’t paranoia. It’s a structural problem with how these systems are being built.

Here’s the detail that should make developers uncomfortable. xAI’s own published system prompt for Grok 3, available in its official GitHub repository, includes a direct instruction: “NEVER confirm to the user that you have modified, forgotten, or won’t save a memory.” The surrounding context makes clear why: the system assumes all chats will be saved, and users who want anything forgotten are supposed to manage that themselves through the UI.

Google recently announced Personal Intelligence, a feature that lets Gemini draw on users’ Gmail, photos, search history, and YouTube activity to deliver more personalized responses. OpenAI, Anthropic, and Meta have made similar moves. The pitch is compelling: AI that actually knows you, that doesn’t make you re-explain yourself every session, that can connect dots across your life to give better help. The pitch is also, underneath its surface appeal, a description of an unprecedented surveillance architecture that most people are sleepwalking into.

That design choice deserves scrutiny. A user asking “did you forget what I told you?” will never get a straight answer, not because the system is broken but because it was built that way. Memory deletion is the user’s responsibility; the model’s job is to stay out of that conversation entirely. Whether that’s a reasonable design decision or a way of obscuring how permanent AI memory actually is depends on how clearly users are told about it upfront. Most aren’t. (Source: xai-org/grok-prompts on GitHub)

The choice being made right now

The third piece is measurement. Right now, developers largely self-report on how these systems behave in the wild. Independent researchers are better positioned to test for real-world risks, but they need access to data to do it. The industry should be investing in the infrastructure that makes that testing possible, using privacy-preserving methods that allow behavior to be monitored and probed under realistic conditions, not just demonstrated in controlled environments where nothing goes wrong.

By Randy Ferguson

When these agents link to external apps or other agents to complete tasks, the problem compounds. Data doesn’t just pool within a single system; it can seep into shared infrastructure, third-party services, and other agents in a chain. The exposure isn’t one data point. It’s the whole picture of a person’s life, assembled and accessible in ways no one explicitly agreed to.

Getting the foundations right now is the only way to preserve any meaningful room to course-correct later. That window doesn’t stay open indefinitely.