What is robots.txt?

Why robots.txt matters

- admin and technical pages

- internal search results and filtered URLs

- duplicate or automatically generated pages that were never intended for search results

- crawl budget (the limited attention search engines give your site) isn’t wasted on low‑value content

- important pages get the priority they deserve

- your site’s visibility in search results stays strong and undiluted

- server resources aren’t strained by unnecessary crawling

Managing robots.txt in Drupal

- new sections or pages: if you add an area of the site that should remain private (like a staging section, internal tools, or admin-only pages)

- removed or reorganized content: when URLs are deleted, or paths change

- new file directories or media: if you add folders for images, downloads, or scripts that don’t need to be indexed

- SEO strategy changes: for example, if you want search engines to focus only on certain sections, or if you restructure categories

- switching from single-site to multisite: each new site may need its own robots.txt file, especially if the content or structure differs (we’ll talk about specific options for multisite setups in more detail soon)

Robots.txt vs. other SEO tools

- Crawling is when search engine bots visit your website and read pages.

- Indexing is when search engines analyze a page, decide whether it should appear in search results, and store it in their index.

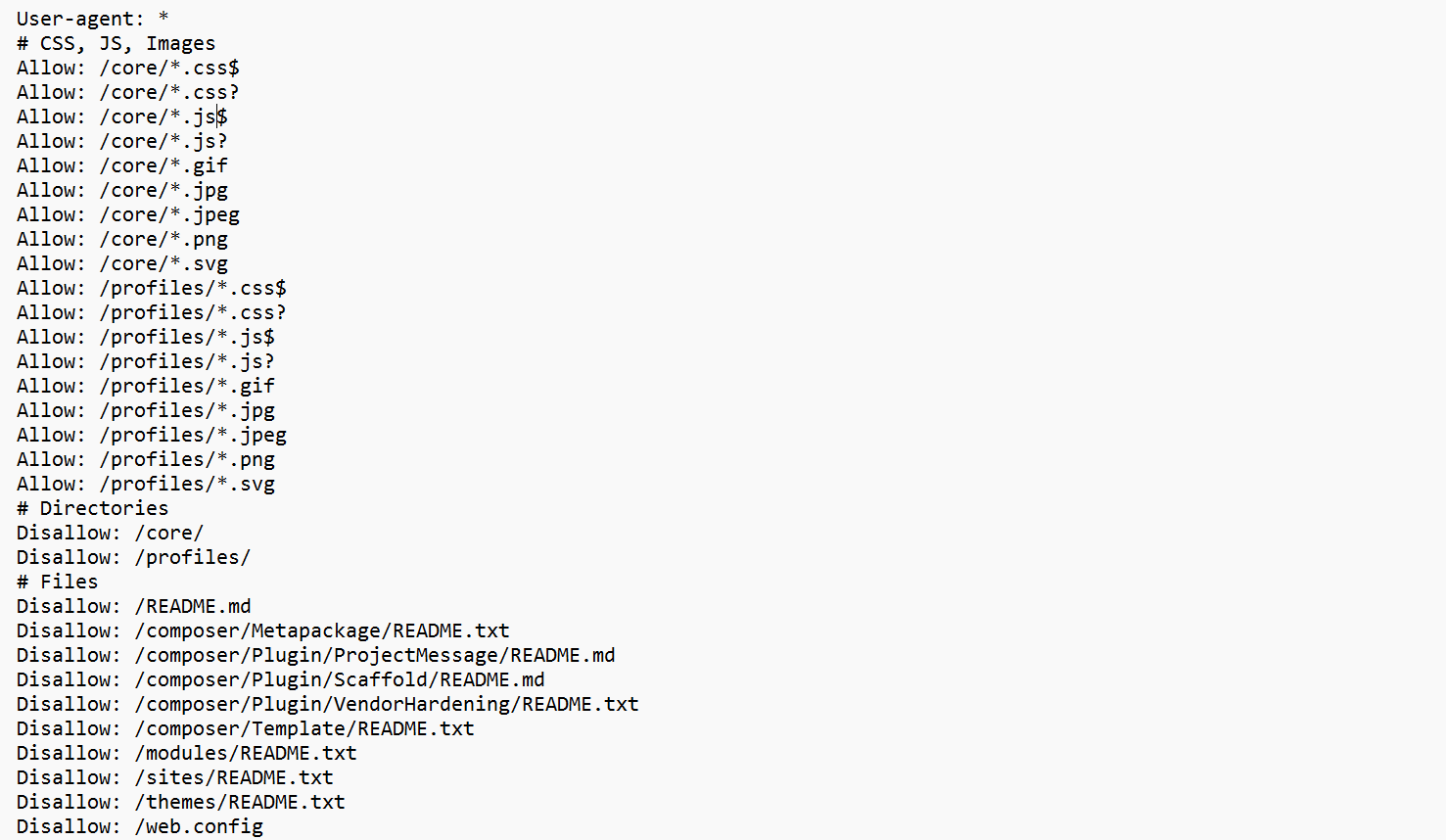

The “User-agent” directive: who the rules apply to
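As a rough illustration of how User-agent groups scope rules to particular crawlers, the sketch below feeds a small made-up rules file to Python's standard `urllib.robotparser` module. The bot name `ExampleBot` and the paths are assumptions for demonstration only, and Python's parser is simpler than the matching logic real search engines use.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: one group for every bot, one stricter group for a single bot.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

User-agent: ExampleBot
Disallow: /
"""


def build_parser(text: str) -> RobotFileParser:
    """Parse robots.txt content held in a string (no network access needed)."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# A bot with no group of its own falls back to the "*" group.
print(parser.can_fetch("Googlebot", "/node/1"))
print(parser.can_fetch("Googlebot", "/admin/config"))

# ExampleBot matches its own group, which blocks everything.
print(parser.can_fetch("ExampleBot", "/node/1"))
```

Here the generic group only keeps bots out of `/admin/`, while the named group shuts one specific crawler out of the whole site.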

Clean URLs and compatibility rules
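Assuming the compatibility rules meant here are the ones in Drupal's default robots.txt that repeat each blocked path with an `/index.php/` prefix, the sketch below shows why both forms are listed: rules match by path prefix, so a rule for `/admin/` does not cover `/index.php/admin/`. The helper name `is_blocked` is hypothetical, and the check uses Python's standard `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser


def is_blocked(rules: str, path: str, agent: str = "*") -> bool:
    """Return True if `rules` forbid `agent` from crawling `path`."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return not parser.can_fetch(agent, path)


# Only the clean-URL form of the admin path is listed.
CLEAN_ONLY = "User-agent: *\nDisallow: /admin/\n"

# Both the clean-URL form and the /index.php/ form are listed.
BOTH_FORMS = CLEAN_ONLY + "Disallow: /index.php/admin/\n"

print(is_blocked(CLEAN_ONLY, "/admin/config"))            # clean URL is covered
print(is_blocked(CLEAN_ONLY, "/index.php/admin/config"))  # non-clean URL slips through
print(is_blocked(BOTH_FORMS, "/index.php/admin/config"))  # covered once both forms exist
```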

How to manage robots.txt via Drupal’s file system

- Open the robots.txt file in your code editor.

- Adjust or add rules as needed.

- Save the file, then deploy it to your server so the updated version becomes part of your live site. (In practice, this means publishing the file the same way you would any other code change, for example, committing it with Git or uploading it through your deployment workflow.)

- Verify the result in your browser. Visit https://example.com/robots.txt to confirm the new rules are visible and correct.

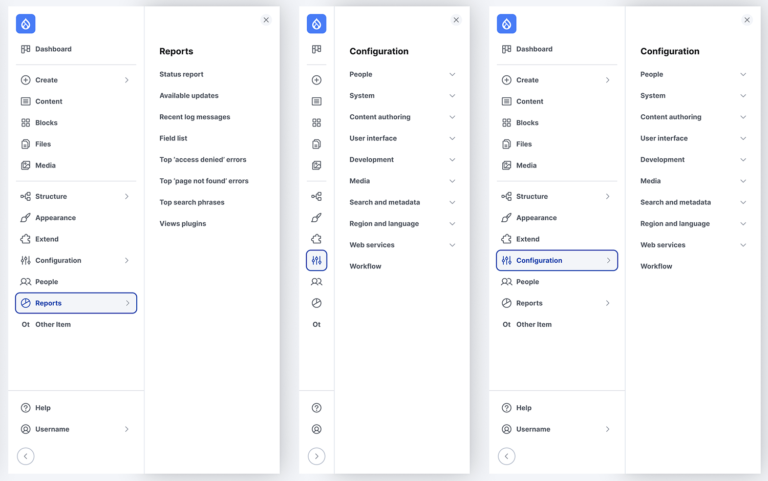
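The browser check in the last step can also be scripted. The following is a minimal sketch using only Python's standard library; the function names, the sample rules, and the list of paths are placeholders, not part of Drupal or any module.

```python
from urllib.request import urlopen
from urllib.robotparser import RobotFileParser


def fetch_robots(url: str) -> str:
    """Download a robots.txt file and return its text (needs network access)."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def still_crawlable(robots_text: str, paths: list[str], agent: str = "*") -> list[str]:
    """Return the entries of `paths` that the rules do NOT block for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return [path for path in paths if parser.can_fetch(agent, path)]


# Offline demonstration with an inline file; rules and paths are placeholders.
SAMPLE = """\
User-agent: *
Disallow: /admin/
Disallow: /search/
"""

SHOULD_BE_BLOCKED = ["/admin/config", "/search/node", "/internal-tools/"]

# Anything printed here is a path you meant to block but did not.
print(still_crawlable(SAMPLE, SHOULD_BE_BLOCKED))  # ['/internal-tools/']
```

Against a live site, the same check would read `still_crawlable(fetch_robots("https://example.com/robots.txt"), SHOULD_BE_BLOCKED)`. An empty list means every path you expected to be blocked is covered by the deployed rules.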

How to manage robots.txt via a Drupal module

Robots.txt influences indexing only indirectly. For example, if you block admin pages from crawling, search engines can’t read their content, so those pages usually won’t be indexed. But this isn’t guaranteed: a blocked page can still appear as a bare URL in search results if other pages link to it.

Best practices for robots.txt on Drupal sites

- Keep it simple. Only block paths that clearly shouldn’t appear in search results, such as admin pages, internal search, or technical directories.

- Avoid overcomplicating rules. Long lists of overlapping directives are hard to maintain, and a single mistake can block pages you want crawled. A short file reduces errors and keeps bots focused on your important pages.

- Use trusted sources for rules. The default Drupal file is already safe and effective. Google and Bing also publish official guidance on robots.txt syntax and best practices, and SEO modules or generators can provide example rules and simplify configuration.

- Don’t block your entire site.

- Don’t rely on robots.txt for security. It only guides well-behaved bots; it does not protect sensitive content.
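One way to guard against accidentally blocking the whole site is a small pre-deployment check. The sketch below is an assumption about how such a check might look, not an existing Drupal tool: the hypothetical function `blocks_entire_site` scans the file for a bare `Disallow: /` inside a `User-agent: *` group. It ignores `Allow` overrides, so treat a positive result as a prompt to review the file by hand.

```python
def blocks_entire_site(robots_text: str) -> bool:
    """Return True if a `User-agent: *` group contains a site-wide Disallow."""
    agents: list[str] = []  # user agents named by the group being read
    in_rules = False        # True once the current group has started its rules

    for raw in robots_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()

        if field == "user-agent":
            if in_rules:
                # A User-agent line after rules starts a new group.
                agents, in_rules = [], False
            agents.append(value)
        elif field in ("allow", "disallow"):
            in_rules = True
            if field == "disallow" and value in ("/", "/*") and "*" in agents:
                return True
    return False


print(blocks_entire_site("User-agent: *\nDisallow: /\n"))
print(blocks_entire_site("User-agent: *\nDisallow: /admin/\n"))
```

Running a check like this in a deployment pipeline can catch a staging-only robots.txt before it reaches production.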

Final thoughts