The first attempt was to copy/paste the text of an issue into ChatGPT/Claude/Gemini, and ask the AI to list the names of the people to credit. Most tools silently cut the text when it goes over the context limit and only process part of it. Some can go back and process the rest of the file, but it’s not automatic: they need to be asked.

Context

To handle large issues I tried various ways of processing the data. When the text is larger than the available context, I split things up.

Tools

The goal was to point the AI at a Drupal Core issue, and get a list of names to credit. We have guidelines to explain how credit is granted for Drupal core issues. In Drupal we value discussion, documentation, governance, and triage as much as code, or at least we try hard to. I have the issue and user credit data in a local DB for all core issues since 2003 (data for drupal.org is under a BY-SA license). Sounds doable. Of the 10229 already resolved core issues, the majority (75%) have fewer than 6 people credited; it’s easy enough to figure out the credit in that situation, no need for an LLM. Where I would really like help is the remaining 25% of issues, the ones with 197 comments and 75 users involved.

Online models

I had the best results with Option 3. It preserves the original text, which is helpful for finetuning.

I have 10k examples of core credit attribution, maybe it’s enough to finetune the various models and see how they improve? Maybe I can blunt the bias against non-code contributors? I grabbed the most recent 4000 issues from the dataset and finetuned all the models I already tested. That script was written by Claude Code because I didn’t know anything about finetuning. It took the better part of a week just to train and evaluate the various models, but it got there eventually.

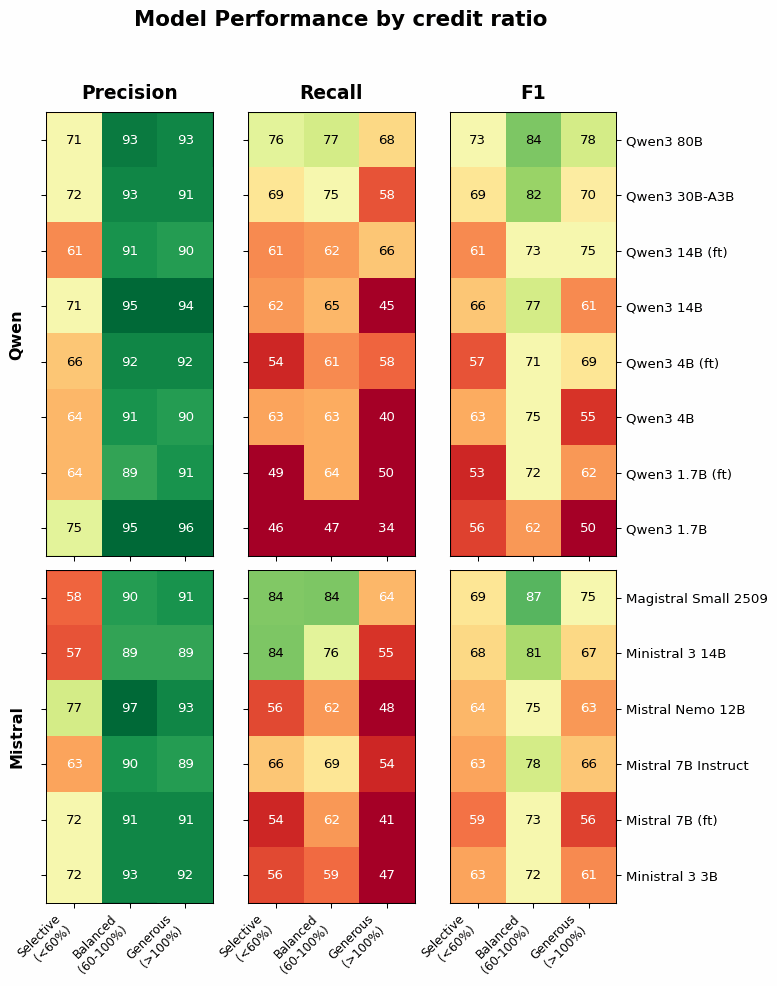

Before doing anything locally, let’s check the data. We need to define some ways to group and express the type of issue we’re working with. The main drivers of the difficulty of credit assignment are the number of people involved and the “credit ratio”: how many contributors are granted credit out of all the contributors that worked on the issue. To simplify a bit we’re only going to look at the credit ratio, and we split issues into 3 bins:

Going local

Once we step away from the code and from LLM-assisted “yes-tools” and think about it for a second… this was a terrible idea from the beginning. If successfully implemented we would spend time evaluating the LLM output, not thinking about people that helped improve Drupal. The more we’d rely on this, the more we’d be chipping away at the community side of Drupal. I still believe in “come for the code, stay for the community”.

Data

I wrote a few things on Claude Code already, I’ll add a little more here: it can never be a substitute for domain knowledge. It might make it easy to take the first step and demystify a topic, but once you’re past that it’ll start being harmful to the project. It’s up to you to level up the domain knowledge. Nothing here is new, it’s all been said before, I’m just coming to terms with it more publicly than usual.

- Generous (12% of issues): More than 100% credit ratio: more people credited than people involved in the issue. This is usually when big initiatives are wrapped up, credit is ported from a duplicate issue, or issues to record a meeting. Generally anything that tracks contribution outside of the Drupal issue queue. This happens more in Drupal than probably anywhere else since we recognize non-code contribution better than other projects.

- Balanced (64% of issues): between 60% and 100% of the people involved in the issue receive credit. This is how most issues go, a group of people take the issue from start to finish, and a few others get involved to do triage, add +1, show support, raise a concern, a potential related issue, or duplicate a manual test or screenshot that was already there before.

- Selective (24% of issues): Less than 60% of people involved in the issue receive credit. In this category there are usually more than 8 people involved and about 45 comments to read through. These are the hardest issues to credit; this is when it can take me 30 min to sort out the credit.
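
The three bins above can be expressed as a small function. This is a minimal sketch, not the code used for the evaluation: the function name `credit_bin` and its arguments are hypothetical, and putting a ratio of exactly 60% or exactly 100% in the balanced bin is an assumption based on the wording of the list.

```python
def credit_bin(credited: int, involved: int) -> str:
    """Return the bin name for an issue, based on its credit ratio.

    credited: number of people who received credit on the issue.
    involved: number of people who took part in the issue.
    Both names are illustrative, they are not from the original dataset.
    """
    if involved <= 0:
        raise ValueError("an issue needs at least one person involved")
    if credited < 0:
        raise ValueError("credited cannot be negative")

    # Integer comparisons avoid floating point surprises at the boundaries.
    if credited > involved:
        # More than 100%: more people credited than people involved.
        return "generous"
    if credited * 100 >= involved * 60:
        # Between 60% and 100% (boundaries included, by assumption).
        return "balanced"
    # Less than 60% of the people involved receive credit.
    return "selective"
```

For example, an issue with 8 people involved and 3 credited has a 37.5% credit ratio, so `credit_bin(3, 8)` lands in the selective bin.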

I only tested so-called “open source” or “open weights” models: mistral, olmo, qwen. Using the same method as with the online models, I got comparable results. The open source models are not significantly worse than commercial ones at this task, so it makes sense to explore further. Going local means I can control the environment and keep all the data private.

Prompts & Context management

Even with that improvement, it was still missing people and mis-crediting, especially on larger issues. Time to try things out locally.

As I’m writing this, I have 10 hours left on the finetuning of a 30B parameter model on a machine, and more models being evaluated on another. I said it before, it’s easy for me to get hooked on this type of problem. I don’t have a solution for reducing the code-centric bias. The technical failure shows a larger problem: LLM logic is not compatible with Drupal community values.

- Aggregate activity by user and ask if they should be credited or not

- Have the LLM summarize user activity before evaluating credit

- Send overlapping subsets of the issue activity and aggregate the results
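
The last option in the list can be sketched as follows. This is an illustration under assumptions, not the script that was actually used: window sizes are counted in comments rather than tokens, `ask_model` is a hypothetical callable standing in for whatever sends a prompt to the LLM, and taking the union of the names returned for each window is only one possible way to aggregate.

```python
from typing import Callable, Iterable, List, Sequence, Set


def overlapping_windows(
    comments: Sequence[str], size: int, overlap: int
) -> List[Sequence[str]]:
    """Split comments into windows of `size`, each sharing `overlap` items with the previous one."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    if not comments:
        return []
    step = size - overlap
    windows = []
    start = 0
    while True:
        windows.append(comments[start:start + size])
        if start + size >= len(comments):
            break  # the last window reached the end of the issue
        start += step
    return windows


def suggest_credit(
    comments: Sequence[str],
    ask_model: Callable[[Sequence[str]], Iterable[str]],
    size: int = 50,
    overlap: int = 10,
) -> Set[str]:
    """Ask the (hypothetical) model about each window and merge the answers.

    Aggregation here is a plain union: anyone credited in at least one
    window ends up in the suggestion. That rule is an assumption.
    """
    credited: Set[str] = set()
    for window in overlapping_windows(comments, size, overlap):
        credited |= set(ask_model(window))
    return credited
```

The overlap is there so that a discussion spanning a window boundary is seen in full by at least one request. A stricter aggregation rule, such as requiring a name to appear in every window where that user is active, would trade recall for precision.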

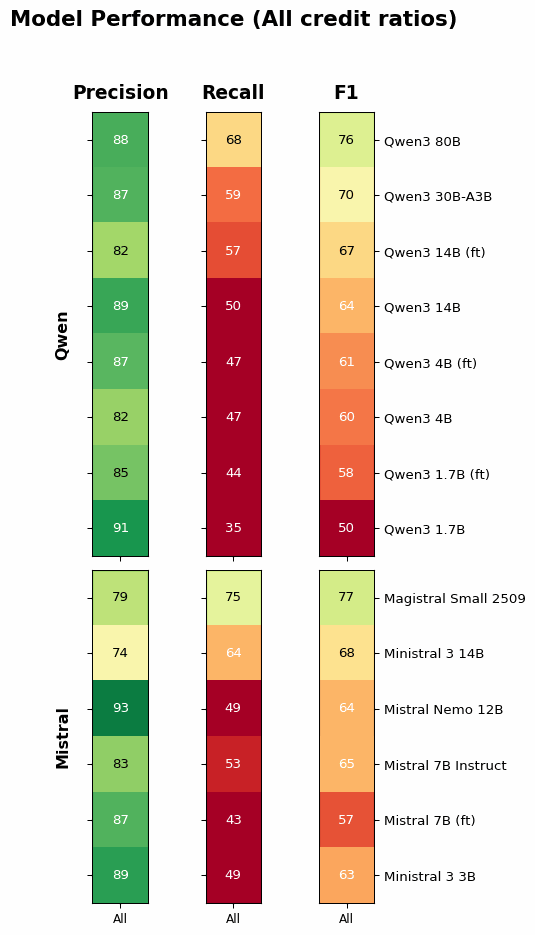

Let’s take a look at the data from all the evaluations. I had the various models evaluate 400 issues with an even spread across all bins. Some explanations:

Finetuning & Claude Code

As expected, the selective column is not looking too good. Selective issues are a real struggle for both humans and LLMs: accuracy is low and recall is very low.

As a reminder I need help with selective issues, all the columns with bad results in them… Overall the precision is too low to be reliable; combined with the low recall, this is not useful. On selective issues about 1 in 4 suggestions is wrong. Models predict half the users, and the predicted half needs to be double checked. Based on the results, making an LLM-powered credit suggestion tool is not going to be helpful.

Performance evaluation

Friends don’t let friends use LLMs for activities where humans are valued.

- Precision: if the model identifies a person to credit, how often is it correct?

- Recall: how many users did the model identify from the activity? If the model can’t identify people it can’t grant credit correctly

- F1: An aggregated metric that gives an idea of the performance of the model based on precision and recall values.
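
To make the three metrics concrete, here is a minimal sketch that computes them for a single issue, comparing the set of users a model suggested with the set that was actually credited. It uses the standard definitions (recall as the share of actually credited users that the model found, F1 as the harmonic mean of precision and recall); the function name and the example user names are made up for illustration.

```python
from typing import Dict, Set


def credit_metrics(predicted: Set[str], actual: Set[str]) -> Dict[str, float]:
    """Compute precision, recall and F1 for one issue.

    predicted: users the model suggested for credit.
    actual: users who really received credit on the issue.
    """
    true_positives = len(predicted & actual)
    # Precision: share of the suggested users that were really credited.
    precision = true_positives / len(predicted) if predicted else 0.0
    # Recall: share of the really credited users that the model found.
    recall = true_positives / len(actual) if actual else 0.0
    # F1: harmonic mean of precision and recall, 0 when both are 0.
    if precision + recall == 0:
        f1 = 0.0
    else:
        f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


# A made-up issue: 6 people credited, the model suggests 4 names, 3 of them correct.
suggested = {"alice", "bob", "carol", "mallory"}
credited = {"alice", "bob", "carol", "dave", "erin", "frank"}
print(credit_metrics(suggested, credited))
```

In this made-up case precision is 0.75 (one suggestion in four is wrong) and recall is 0.5 (half the credited users are found), which gives an F1 of about 0.6.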

There is definitely room for improvement, I don’t know what I’m doing with finetuning. A proper data scientist will most likely improve all the metrics above. I’d be curious to see if the bias against non-code contribution can be resolved; what I tried did not temper this bias at all. Also, we’ve seen with the 80B model the difference sheer size makes. Maybe with a 200+B model, precision and recall might approach usable territory. But at what cost in time, hardware, and expenses? The bias will still be there however big the model is.

Results (in %)

We can see on this graph that most models behave the same way, precision not too bad, and bad recall. Let’s break that down for each type of credit ratio.

I don’t usually fail at making my life easier, but hey, it’s a whole new world lately. To try my hand at LLMs during my trial of AI-assisted coding, I wanted to see if I could customize an LLM for a specific task: assigning user credit on Drupal Core issues. Depending on the complexity, activity, and number of contributors involved, it can take me anywhere between 30 seconds and 30 minutes to assign credit when I commit an issue to Drupal Core. Maybe I could automate some of it?

- On easy to credit issues the performance is ok, might even be enough to use.

- The finetuning Claude Code came up with increases recall at the cost of precision

- The finetuning of Mistral has a problem, precision is increased at the cost of recall

When it worked, it had significant bias. It would only credit people that worked on the code, and that was true even when feeding the credit guidelines in the prompt. I had to explicitly tell it that non-code contributions also need to be credited since it’s valuable work. That helped a little but it wasn’t enough to overcome the models’ training. It’s not a surprise this bias is built-in, it’s probably a side effect of all that GitHub code where code equals contribution. It was still surprising to me how deeply embedded it is in the current models.

The balanced and generous bins are easy to deal with. It’s when you have to go through a large issue keeping track of who did what that it gets complex and time consuming. For humans, as well as LLMs, as we’ll see below.

Take away

Despite knowing about the problems with the tech itself, the potential harm to the community the credit suggestion tool could bring, I’m still thinking about how to improve it, because of curiosity, because it’s a puzzle I want to solve. Add LLM tools that keep you in a constant state of stress, with multiple things going on, you stop thinking about the big picture and spend time on things that should never see the light of day.

We’ll get to the results, but before that I want to come back to Claude Code, the terminal-based LLM “yes-tool” from Anthropic. I didn’t know anything about LLM training/finetuning, so I had Claude Code write and find out how to do things. It got me started relatively fast with working code. But as always when you do something without having the fundamentals, I started chasing my tail and wasted my time with more prompting instead of learning what I needed to get unblocked. I went back to the code earlier this week and, with some basics, it’s going much smoother.

Once I had the data to evaluate the model performance, I tried different prompts and ended up with a system prompt containing the whole contribution guidelines. For “balanced” issues the results were not bad, for the “selective” issues it did not go well. At least half the users were missing with many false positives. I could really see the model’s bias against contributors who did not write code. It’s an uphill battle to make the model not ignore people who helped with the discussion, triage, or decision making.

The trap

Don’t forget to take a step back and think critically of your work.

It’s going to be some kind of LLM, but which one?

The largest model I can run (in a reasonable timeframe) is an 80B model; it performed the best overall, finetuned models included. It seems like the larger models have more space to contextualize content and are better at finding intent and not just pattern matching.