Oct 15, 2024

Ariffud M.

Ollama is an open-source tool that runs large language models (LLMs) directly on a local machine. This makes it particularly appealing to AI developers, researchers, and businesses concerned with data control and privacy.

By running models locally, you maintain full data ownership and avoid the potential security risks associated with cloud storage. Offline AI tools like Ollama also help reduce latency and reliance on external servers, which can make them faster and more reliable.

This article will explore Ollama’s key features, supported models, and practical use cases. By the end, you’ll be able to determine if this LLM tool suits your AI-based projects and needs.

How Ollama works

Ollama runs LLMs locally in a self-contained environment on your system, which helps prevent conflicts with other installed software. This environment already includes all the components needed to deploy AI models, such as:

- Model weights. The parameters the model learned during pre-training, which it uses to generate output.

- Configuration files. Settings that define how the model behaves.

- Necessary dependencies. Libraries and tools that support the model’s execution.

In short, you first pull models from the Ollama library. Then, you run them as-is or adjust parameters to customize them for specific tasks. After the setup, you interact with the models by entering prompts, and they generate responses.
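The pull, run, and prompt steps above can be sketched in Python against the REST API that Ollama serves locally. This minimal example assumes the server is running on its default address (http://localhost:11434) and uses the /api/pull and /api/generate endpoints; the helper names and the llama3.2 model choice are illustrative, not part of Ollama itself.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default local address.
OLLAMA_URL = "http://localhost:11434"


def build_generate_payload(model: str, prompt: str, **options) -> dict:
    """Build the JSON body for one non-streaming /api/generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    if options:
        # Runtime parameters such as temperature go in the "options" object.
        payload["options"] = dict(options)
    return payload


def post_json(path: str, payload: dict) -> dict:
    """POST a JSON body to the local Ollama server and decode the JSON reply."""
    request = urllib.request.Request(
        OLLAMA_URL + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


def pull_and_ask(model: str, prompt: str, temperature: float = 0.2) -> str:
    # Step 1: pull the model from the Ollama library.
    post_json("/api/pull", {"model": model, "stream": False})
    # Steps 2 and 3: run it with an adjusted parameter and send a prompt.
    payload = build_generate_payload(model, prompt, temperature=temperature)
    return post_json("/api/generate", payload)["response"]
```

With the server running, pull_and_ask("llama3.2", "Explain what a context window is.") pulls the model and returns its reply as a string. Setting stream to False makes the server answer with a single JSON object instead of a stream of partial chunks, which keeps the client code short.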

Ollama works best on systems with a discrete graphics processing unit (GPU). While it can also run on machines without one, using a dedicated compatible GPU, like those from NVIDIA or AMD, reduces processing times and makes AI interactions smoother.

We recommend checking Ollama’s official GitHub page for GPU compatibility.

Key features of Ollama

Ollama offers several key features that make offline model management easier and enhance performance.

Local AI model management

Ollama gives you full control over downloading, updating, and deleting models on your system. Because models never leave your machine, this is valuable for developers and researchers who prioritize strict data security.

In addition to basic management, Ollama lets you keep multiple versions of a model side by side by using tags. This is essential in research and production environments, where you might need to revert to an earlier version or test several versions to see which generates the desired results.

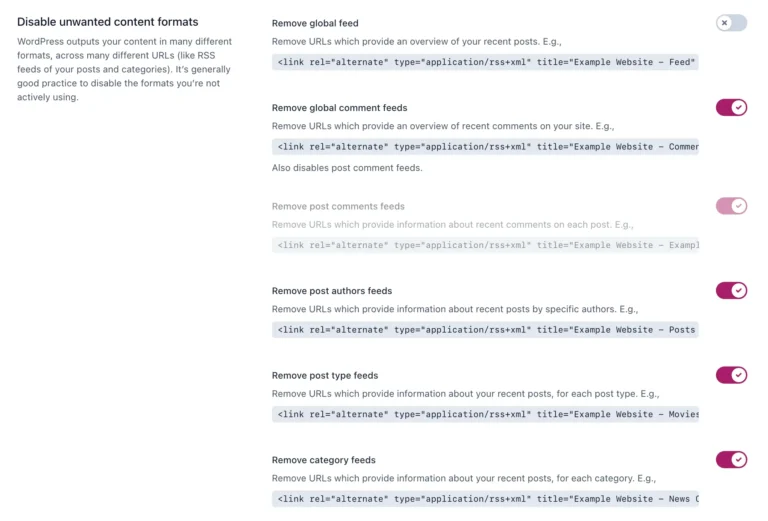
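Ollama identifies versions of a model with tags written as name:tag, such as llama3.2:1b and llama3.2:3b, and falls back to the latest tag when none is given. The sketch below assumes a local server on the default port: it groups installed models by tag using the GET /api/tags endpoint and removes a single version with DELETE /api/delete. The function names are hypothetical helpers.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: default local server


def split_model_ref(ref: str) -> tuple[str, str]:
    """Split 'name:tag' into (name, tag), defaulting the tag to 'latest'."""
    name, sep, tag = ref.rpartition(":")
    # No colon, or a colon that belongs to a registry host:port, means no tag.
    if not sep or "/" in tag:
        return ref, "latest"
    return name, tag


def installed_versions(tags_response: dict) -> dict[str, list[str]]:
    """Group the models in a GET /api/tags response by name, listing their tags."""
    versions: dict[str, list[str]] = {}
    for model in tags_response.get("models", []):
        name, tag = split_model_ref(model["name"])
        versions.setdefault(name, []).append(tag)
    return versions


def fetch_installed() -> dict:
    """List locally installed models via GET /api/tags."""
    with urllib.request.urlopen(OLLAMA_URL + "/api/tags") as response:
        return json.loads(response.read().decode("utf-8"))


def delete_model(ref: str) -> None:
    """Remove one model version via DELETE /api/delete."""
    request = urllib.request.Request(
        OLLAMA_URL + "/api/delete",
        data=json.dumps({"model": ref}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="DELETE",
    )
    with urllib.request.urlopen(request):
        pass
```

For example, installed_versions(fetch_installed()) might return something like {"llama3.2": ["1b", "3b"]} when two sizes are installed, and delete_model("llama3.2:1b") removes only the 1B version while leaving the other in place.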

Command-line and GUI options

Ollama mainly operates through a command-line interface (CLI), giving you precise control over the models. The CLI allows for quick commands to pull, run, and manage models, which is ideal if you’re comfortable working in a terminal window.
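The same CLI can also be scripted, for example from Python with subprocess. This sketch assumes the ollama binary is on your PATH and limits itself to a few everyday subcommands (pull, run, list, rm, show); the wrapper functions are hypothetical.

```python
import shutil
import subprocess

# A few everyday Ollama subcommands; extend as needed.
SUPPORTED = {"pull", "run", "list", "rm", "show"}


def ollama_cmd(action: str, *args: str) -> list[str]:
    """Build the argument list for an Ollama CLI call."""
    if action not in SUPPORTED:
        raise ValueError(f"unsupported subcommand: {action}")
    return ["ollama", action, *args]


def run_ollama(action: str, *args: str) -> str:
    """Run an Ollama CLI command and return its standard output."""
    if shutil.which("ollama") is None:
        raise RuntimeError("the ollama binary was not found on PATH")
    result = subprocess.run(
        ollama_cmd(action, *args), capture_output=True, text=True, check=True
    )
    return result.stdout
```

For instance, run_ollama("pull", "mistral") followed by run_ollama("run", "mistral", "Write a haiku about terminals.") downloads the model and prints a one-off answer without opening an interactive session. Passing arguments as a list rather than a shell string avoids quoting problems with prompts that contain spaces or quotes.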

Ollama also supports third-party graphical user interface (GUI) tools, such as Open WebUI, for those who prefer a more visual approach.

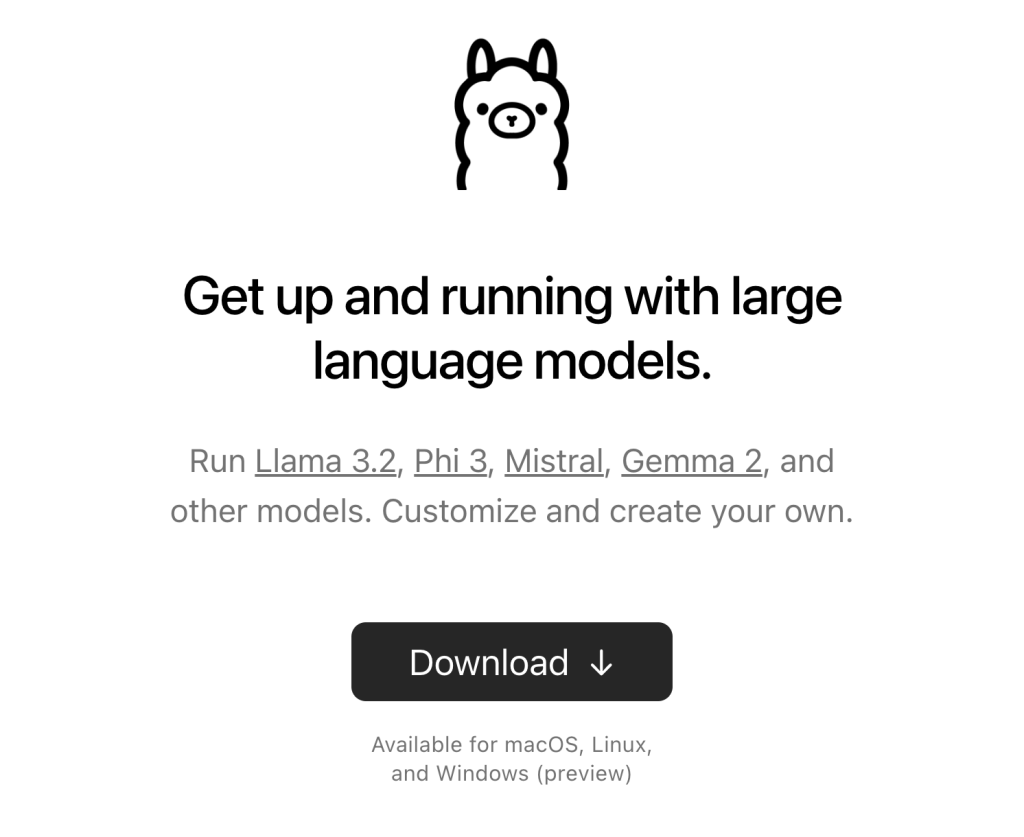

Multi-platform support

Another standout feature of Ollama is its broad support for various platforms, including macOS, Linux, and Windows.

This cross-platform compatibility ensures you can easily integrate Ollama into your existing workflows, regardless of your preferred operating system. However, note that Windows support is currently in preview.

Additionally, Ollama’s compatibility with Linux lets you install it on a virtual private server (VPS). Compared to running Ollama on local machines, using a VPS lets you access and manage models remotely, which is ideal for larger-scale projects or team collaboration.
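On a VPS, Ollama's server is typically made reachable by setting the OLLAMA_HOST environment variable (for example, to 0.0.0.0) before starting it, and clients can read the same variable to find the server. The sketch below assumes that convention and turns an OLLAMA_HOST-style value into a base URL, defaulting to Ollama's standard port 11434; the helper is hypothetical. Because Ollama's API has no built-in authentication, a remote instance is best kept behind a firewall, VPN, or authenticating reverse proxy.

```python
import os
from typing import Optional
from urllib.parse import urlsplit

DEFAULT_PORT = 11434  # Ollama's default API port


def ollama_base_url(host_value: Optional[str] = None) -> str:
    """Turn an OLLAMA_HOST-style value into a base URL for API requests.

    Reads the OLLAMA_HOST environment variable when no value is passed.
    Bare 'host' or 'host:port' values get an http:// scheme and, if no
    port is given, the default port; full URLs are used as-is.
    """
    value = host_value if host_value is not None else os.environ.get("OLLAMA_HOST", "")
    value = value.strip()
    if not value:
        return f"http://localhost:{DEFAULT_PORT}"
    if "://" in value:
        return value.rstrip("/")
    parts = urlsplit("http://" + value)
    return f"http://{parts.hostname}:{parts.port or DEFAULT_PORT}"
```

On the VPS itself, the server can be started with OLLAMA_HOST=0.0.0.0 ollama serve; on your workstation, setting OLLAMA_HOST to the server's address makes ollama_base_url() point at the remote instance.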

Available models on Ollama

Ollama supports numerous ready-to-use and customizable large language models to meet your project’s specific requirements. Here are some of the most popular Ollama models:

Llama 3.2

Llama 3.2 is a versatile model for natural language processing (NLP) tasks, like text generation, summarization, and machine translation. Its ability to understand and generate human-like text makes it popular for developing chatbots, writing content, and building conversational AI systems.

You can fine-tune Llama 3.2 for specific industries and niche applications, such as customer service or product recommendations. With solid multilingual support, this model is also favored for building machine translation systems that are useful for global companies and multinational environments.

Mistral

Mistral handles code generation and large-scale data analysis, making it ideal for developers working on AI-driven coding platforms. Its pattern recognition capabilities enable it to tackle complex programming tasks, automate repetitive coding processes, and identify bugs.

Software developers and researchers can customize Mistral to generate code for different programming languages. Additionally, its data processing ability makes it useful for managing large datasets in the finance, healthcare, and eCommerce sectors.

Code Llama

As the name suggests, Code Llama excels at programming-related tasks, such as writing and reviewing code. It automates coding workflows to boost productivity for software developers and engineers.

Code Llama integrates well with existing development environments, and you can tweak it to understand different coding styles or programming languages. As a result, it can handle more complex projects, such as API development and system optimization.

Gemma 2

Gemma 2 is a family of lightweight open models from Google, available in 2B, 9B, and 27B parameter sizes. It handles text tasks such as question answering, summarization, and reasoning, and its smaller variants are practical to run on consumer hardware.

Industries like eCommerce and digital marketing can use Gemma 2 to generate product descriptions and other relevant content. Because Gemma 2 processes text only, image-based tasks such as captioning product photos or analyzing medical scans require a separate vision-capable model; Ollama’s library includes some, such as LLaVA.

Phi-3

Phi-3 is a family of small language models from Microsoft. Trained on heavily filtered web data and synthetic, textbook-style data, it performs well on reasoning tasks for its size, which makes it useful for research work like literature reviews, data summarization, and scientific analysis.

Medicine, biology, and environmental science researchers can fine-tune Phi-3 to quickly analyze and interpret large volumes of scientific literature, extract key insights, or summarize complex data.

If you’re unsure which model to use, you can explore Ollama’s model library, which provides detailed information about each model, including installation instructions, supported use cases, and customization options.

Use cases for Ollama

Here are some examples of how Ollama can impact workflows and create innovative solutions.

Creating local chatbots

With Ollama, developers can create highly responsive AI-driven chatbots that run entirely on local servers, ensuring that customer interactions remain private.

Running chatbots locally lets businesses avoid the latency associated with cloud-based AI solutions, improving response times for end users. Industries like transportation and education can also fine-tune models to fit specific language or industry jargon.
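A local chatbot mostly comes down to keeping the conversation history and sending it to the model on every turn. The sketch below assumes a local server on the default port and uses Ollama's /api/chat endpoint, which accepts a list of role/content messages; the LocalChat class and the support-agent system prompt are hypothetical.

```python
import json
import urllib.request

CHAT_URL = "http://localhost:11434/api/chat"  # assumption: default local server


class LocalChat:
    """Keep a conversation history and send it to Ollama's /api/chat endpoint."""

    def __init__(self, model: str, system_prompt: str = "") -> None:
        self.model = model
        self.messages: list[dict[str, str]] = []
        if system_prompt:
            self.messages.append({"role": "system", "content": system_prompt})

    def payload(self) -> dict:
        # The whole history is sent on every turn so the model keeps context.
        return {"model": self.model, "messages": list(self.messages), "stream": False}

    def ask(self, user_text: str) -> str:
        self.messages.append({"role": "user", "content": user_text})
        request = urllib.request.Request(
            CHAT_URL,
            data=json.dumps(self.payload()).encode("utf-8"),
            headers={"Content-Type": "application/json"},
        )
        with urllib.request.urlopen(request) as response:
            reply = json.loads(response.read().decode("utf-8"))["message"]["content"]
        # Store the answer so follow-up questions can refer back to it.
        self.messages.append({"role": "assistant", "content": reply})
        return reply
```

A session might start with chat = LocalChat("llama3.2", "You are a helpful support agent.") and then call chat.ask(...) once per user message. The system prompt is where industry jargon or tone guidelines could go without retraining the model.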

Conducting local research

Universities and data scientists can leverage Ollama to conduct offline machine-learning research. This lets them experiment with datasets in privacy-sensitive environments, ensuring the work remains secure and is not exposed to external parties.

Ollama’s ability to run LLMs locally is also helpful in areas with limited or no internet access. Additionally, research teams can adapt models to analyze and summarize scientific literature or draw out important findings.
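For long papers that exceed a model's context window, one common approach is to summarize fixed-size chunks and then combine the partial summaries. This is a general map-reduce summarization pattern, not an Ollama feature. The sketch assumes a local server on the default port; the 800-word chunk size is an arbitrary, illustrative choice, and the helper names are hypothetical.

```python
import json
import urllib.request

GENERATE_URL = "http://localhost:11434/api/generate"  # assumption: default local server


def chunk_words(text: str, max_words: int = 800) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    if max_words < 1:
        raise ValueError("max_words must be positive")
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def generate(model: str, prompt: str) -> str:
    """Send one non-streaming prompt to Ollama and return the text reply."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    request = urllib.request.Request(
        GENERATE_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))["response"]


def summarize_long(text: str, model: str = "llama3.2") -> str:
    # Map: summarize each chunk independently.
    partials = [generate(model, "Summarize the key findings:\n\n" + chunk) for chunk in chunk_words(text)]
    # Reduce: merge the partial summaries into a single overview.
    return generate(model, "Combine these summaries into one concise overview:\n\n" + "\n\n".join(partials))
```

Everything, including the source text, stays on the machine running Ollama, which is what makes this pattern usable for unpublished or sensitive research material.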

Building privacy-focused AI applications

Ollama is well suited to developing privacy-focused AI applications for businesses that handle sensitive information. For instance, law firms can create software for contract analysis or legal research without compromising client information.

Running AI locally keeps all computations within the company’s infrastructure, which helps businesses meet data protection requirements such as the GDPR’s strict rules on how personal data is handled.
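One practical safeguard is to have the application refuse to send sensitive data unless the model endpoint is local. The hypothetical helper below is not part of Ollama; it accepts localhost plus loopback and private-network IP addresses and rejects every other hostname rather than resolving it, which keeps the check conservative. A check like this supports, but does not by itself amount to, regulatory compliance.

```python
import ipaddress
from urllib.parse import urlsplit


def is_local_endpoint(url: str) -> bool:
    """Return True if the URL's host is loopback or a private-network address.

    Hostnames other than 'localhost' are rejected instead of being resolved
    through DNS, so an unexpected remote server is never treated as local.
    """
    host = urlsplit(url).hostname
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        address = ipaddress.ip_address(host)
    except ValueError:
        return False  # a non-IP hostname: don't guess where it points
    return address.is_loopback or address.is_private
```

An application could call is_local_endpoint("http://localhost:11434") at startup and abort if it returns False, for example when a configuration change accidentally points the client at an external service.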

Integrating AI into existing platforms

Because Ollama exposes a local REST API, it can integrate with existing software platforms, letting businesses add AI capabilities without overhauling their current systems.

For instance, companies using content management systems (CMSs) can integrate local models to improve content recommendations, automate editing processes, or suggest personalized content to engage users.

Another example is integrating Ollama into customer relationship management (CRM) systems to enhance automation and data analysis, ultimately improving decision-making and customer insights.
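As an illustration of the CMS case, a content system could ask a local model for topic tags whenever an article is saved. The sketch assumes a local server on the default port and sets the format parameter of /api/generate to "json", which asks Ollama to return valid JSON; the prompt wording, helper names, and five-tag limit are hypothetical choices. The reply is still validated, because a model can return well-formed JSON that doesn't match the requested shape.

```python
import json
import urllib.request

GENERATE_URL = "http://localhost:11434/api/generate"  # assumption: default local server


def build_tagging_request(model: str, article_text: str) -> dict:
    """Ask the model for topic tags, requesting a JSON-formatted reply."""
    prompt = (
        "Suggest up to five topic tags for the article below. "
        'Reply only with JSON shaped like {"tags": ["..."]}.\n\n' + article_text
    )
    # format="json" asks Ollama to constrain the output to valid JSON.
    return {"model": model, "prompt": prompt, "format": "json", "stream": False}


def parse_tags(response_text: str, limit: int = 5) -> list[str]:
    """Pull a clean tag list out of the model's reply, tolerating bad output."""
    try:
        data = json.loads(response_text)
    except json.JSONDecodeError:
        return []
    if not isinstance(data, dict) or not isinstance(data.get("tags"), list):
        return []
    tags = [t.strip() for t in data["tags"] if isinstance(t, str) and t.strip()]
    return tags[:limit]


def suggest_tags(model: str, article_text: str) -> list[str]:
    """Send the tagging request to a local Ollama server and parse the reply."""
    request = urllib.request.Request(
        GENERATE_URL,
        data=json.dumps(build_tagging_request(model, article_text)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read().decode("utf-8"))
    return parse_tags(body["response"])
```

Returning an empty list on bad output lets the CMS fall back to manual tagging instead of failing the save. The same request-then-validate shape would apply to a CRM that asks for, say, a sentiment label on each customer note.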

Benefits of using Ollama

Ollama provides several advantages over cloud-based AI solutions, particularly for users prioritizing privacy and cost efficiency:

- Enhanced privacy and data security. Ollama keeps sensitive data on local machines, reducing the risk of exposure through third-party cloud providers. This is crucial for organizations such as law firms, healthcare providers, and financial institutions, where data privacy is a top priority.

- No reliance on cloud services. Businesses maintain complete control over their infrastructure without depending on external cloud providers. This independence lets them decide how and when to scale on their own servers and ensures that all data remains within the organization’s control.

- Customization flexibility. Ollama lets developers and researchers tweak models according to specific project requirements. This flexibility ensures better performance on tailored datasets, making it ideal for research or niche applications where a one-size-fits-all cloud solution may not be suitable.

- Offline access. Running AI models locally means you can work without internet access. This is especially useful in environments with limited connectivity or for projects requiring strict control over data flow.

- Cost savings. By eliminating the need for cloud infrastructure, you avoid recurring costs related to cloud storage, data transfer, and usage fees. While cloud infrastructure may be convenient, running models offline can lead to significant long-term savings, particularly for projects with consistent, heavy usage.
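The cost-savings trade-off comes down to simple arithmetic: a one-off hardware cost is paid back by the monthly difference between cloud and local running costs. The hypothetical helper below captures that break-even calculation; any figures passed to it are made up for illustration, and it ignores factors such as staff time and hardware depreciation.

```python
def break_even_months(
    hardware_cost: float, monthly_cloud_cost: float, monthly_local_cost: float
) -> float:
    """Months until a one-off hardware purchase is repaid by lower running costs.

    Returns infinity when running locally is not cheaper per month than the cloud.
    """
    monthly_saving = monthly_cloud_cost - monthly_local_cost
    if monthly_saving <= 0:
        return float("inf")
    return hardware_cost / monthly_saving
```

With purely hypothetical numbers, a $3,000 machine pays for itself in 7.5 months if the equivalent cloud usage would cost $500 a month against $100 locally, but takes 60 months if the cloud bill is only $150. That gap is why consistent, heavy usage favors running models locally.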

Conclusion

Ollama is ideal for developers and businesses looking for a flexible, privacy-focused AI solution. It provides complete control over data privacy and security by letting you run LLMs locally.

Additionally, Ollama’s ability to adjust models makes it a powerful option for specialized projects. Whether you’re developing chatbots, conducting research, or building privacy-centric applications, it offers a cost-effective alternative to cloud-based AI solutions.

Finally, if you’re looking for a tool that offers both control and customization for your AI-based projects, Ollama is definitely worth exploring.

What is Ollama FAQ

What is Ollama AI used for?

Ollama runs and manages large language models (LLMs) locally on your machine. It’s ideal for users who want to avoid cloud dependencies, ensuring complete control over data privacy and security while maintaining flexibility in AI model deployment.

Can I customize the AI models in Ollama?

Yes, you can customize AI models in Ollama using a Modelfile, a plain-text file that specifies a base model, parameters, and instructions such as a system prompt. This lets you modify models to fit specific project needs, adjust parameters, or even create new versions based on existing ones.
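A Modelfile is short enough to generate programmatically. The sketch below renders one using the FROM, PARAMETER, and SYSTEM instructions; the base model, parameter values, and system prompt in the usage example are illustrative assumptions, and render_modelfile is a hypothetical helper.

```python
def render_modelfile(base: str, system: str, **parameters) -> str:
    """Render Modelfile text: a base model, PARAMETER lines, and a system prompt."""
    if '"""' in system:
        raise ValueError("system prompt must not contain triple quotes")
    lines = [f"FROM {base}"]
    for name, value in parameters.items():
        lines.append(f"PARAMETER {name} {value}")
    # Triple quotes let the system prompt span several lines.
    lines.append(f'SYSTEM """{system}"""')
    return "\n".join(lines) + "\n"
```

For example, render_modelfile("llama3.2", "You are a concise legal assistant.", temperature=0.3, num_ctx=4096) produces a four-line Modelfile. Saving it as a file named Modelfile and running ollama create legal-helper -f Modelfile registers the customized model, which ollama run legal-helper then starts; legal-helper is a hypothetical name.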

Is Ollama better than ChatGPT?

Ollama offers a privacy-focused alternative to ChatGPT by running models and storing data on your system. While ChatGPT provides more scalability through cloud-based infrastructure, it may raise concerns about data security. The better choice depends on your project’s privacy and scalability needs.