The future of AI data access is unlikely to be purely one or the other. Most enterprises sit on a mountain of unstructured data (emails, PDFs, videos, chat logs, etc.) that won’t neatly flow through APIs. In fact, an estimated 70% of enterprise unstructured data is irrelevant, outdated, or duplicative for a given AI use case. Simply pointing AI agents at it will degrade quality, raise costs, and erode ROI. This is where a hybrid approach comes into play.

Scraping approaches appeal to AI builders for one simple reason: speed. Why wait for formal agreements when you can point your pipeline at a web page or system and start ingesting? Why negotiate royalties when you can consume “public” data for free?

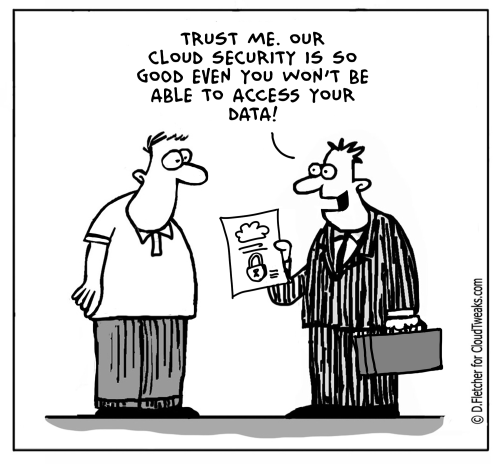

But for enterprise adoption, where governance, compliance, and trust are non-negotiable, official integrations are the only viable path. MCP servers, in particular, stand out as a promising standard. They separate the role of the data provider from the AI system that uses it, so each can change or improve without breaking the connection. At the same time, the interface stays consistent, making MCP servers highly adaptable for enterprises that need to maintain control while still enabling agentic AI interaction with their data.
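To make the decoupling idea concrete, here is a minimal Python sketch. It does not use the actual MCP SDK or wire protocol. The `DataProvider` interface, the two HR provider classes, and the `answer` function are hypothetical names invented for illustration. The point is only that the agent-side code depends on a stable contract, so the provider can be reworked behind it.

```python
from typing import Protocol


class DataProvider(Protocol):
    """Hypothetical contract the agent relies on (an assumption, not the MCP SDK)."""

    def fetch(self, query: str) -> list[str]: ...


class HrSystemV1:
    """Provider backed by an in-memory dict, standing in for a real HR system."""

    def __init__(self) -> None:
        self._records = {"alice": "Alice, Engineering", "bob": "Bob, Finance"}

    def fetch(self, query: str) -> list[str]:
        return [v for k, v in self._records.items() if query.lower() in k]


class HrSystemV2:
    """Reworked provider: different internal storage, same fetch() contract."""

    def __init__(self) -> None:
        self._rows = [("alice", "Engineering"), ("bob", "Finance")]

    def fetch(self, query: str) -> list[str]:
        return [
            f"{name.title()}, {dept}"
            for name, dept in self._rows
            if query.lower() in name
        ]


def answer(provider: DataProvider, question: str) -> str:
    """Agent-side code: depends only on the interface, never on provider internals."""
    hits = provider.fetch(question)
    return hits[0] if hits else "no data"
```

Swapping `HrSystemV1` for `HrSystemV2` requires no change to `answer`, which is the property the paragraph above attributes to a consistent interface.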

The tradeoff is time and negotiation. A data provider, such as a financial market feed, an HR system, or a cloud storage platform, may charge for access or restrict usage. Enterprises may need to invest in integration projects rather than simple scraping scripts. But the payoff is reliability, legal defensibility, and long-term alignment between AI builders and data stewards.

Why Access Matters: AI Without Data Is Just Math

In each case, the agent’s intelligence is only as good as the data it can access. And as enterprises seek to embed AI into more workflows, the ability to connect securely, with proper governance, access controls, and auditing, to the vast reservoirs of file and object data that live outside databases will be the difference between experiments and mission-critical deployments.

This mindset has fueled the explosion of RAG pipelines and browser automation techniques. For startups and research projects, it can be a pragmatic shortcut: data without red tape.

On the other hand, integration-first approaches work through APIs, webhooks, SFTP, SOAP/REST interfaces, GraphQL, or dedicated MCP servers. These favor control, stability, compliance, and often monetization. While this path can feel slower, it creates a more reliable and sustainable foundation for enterprise AI adoption.

The Temptation of Scraping

The choice isn’t just about speed versus structure. It’s about whether AI agents will be trusted copilots in enterprise workflows or remain risky experiments stuck on the fringes.

Enterprise IT organizations need to be aware that their corporate data may be exposed to AI agent scraping without their knowledge. In this article, we explore the two ways AI agents can access corporate data, and the pros and cons of each.

AI agents are only as powerful as the data they consume. Whether they’re surfacing insights, powering faster medical diagnoses by analyzing radiology images, or automating back-office workflows, access to relevant, high-quality data, especially unstructured data, is what makes them valuable.

The result is that while scraping may accelerate short-term innovation, it undermines long-term trust, adoption, and market viability. What looks like a free data pipeline today could become a litigation minefield tomorrow.

The Strength of Official Integrations

For enterprises, this is the more future-proof path. APIs allow for secure authentication, rate-limiting, and monitoring. Data can be curated, classified, and audited. Changes in schema or logic can be versioned and communicated. And as needs evolve, these integrations can evolve with them.
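As a rough illustration of the authentication, rate-limiting, and monitoring controls described above, the following Python sketch combines all three in one place. The `ApiGateway` class, its parameters, and the status strings are hypothetical and not taken from any real product. It uses a simple sliding-window limiter, which is one of several possible rate-limiting schemes.

```python
import time
from typing import Callable


class ApiGateway:
    """Hypothetical gateway: API-key check, sliding-window rate limit, audit log."""

    def __init__(self, api_keys: set[str], limit: int, window_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._api_keys = api_keys
        self._limit = limit          # max requests per key per window
        self._window_s = window_s    # window length in seconds
        self._clock = clock          # injectable so behavior can be tested
        self._calls: dict[str, list[float]] = {}
        self.audit_log: list[tuple[float, str, str]] = []

    def request(self, api_key: str, resource: str) -> str:
        now = self._clock()
        # Authentication: unknown keys are rejected and the attempt is recorded.
        if api_key not in self._api_keys:
            self.audit_log.append((now, api_key, "denied"))
            return "401 unauthorized"
        # Rate limiting: keep only the calls that fall inside the current window.
        recent = [t for t in self._calls.get(api_key, []) if now - t < self._window_s]
        if len(recent) >= self._limit:
            self._calls[api_key] = recent
            self.audit_log.append((now, api_key, "throttled"))
            return "429 too many requests"
        recent.append(now)
        self._calls[api_key] = recent
        # Monitoring: every successful access leaves an audit entry.
        self.audit_log.append((now, api_key, "ok"))
        return f"200 {resource}"
```

A scraper offers the data owner none of these control points. With a gateway of this kind, each agent request is attributable to a key, bounded in volume, and visible in a log.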

Are there cases where scraping is still the right call? Yes, but they tend to be niche or transient. Researchers testing a hypothesis, startups prototyping quickly, or agents consuming genuinely public and license-free data may find scraping pragmatic.

AI without data is just math. And while scraping-first approaches offer speed and convenience, they are brittle and risky in the long run. Official integrations via APIs, webhooks and MCP servers offer the control, compliance, and adaptability enterprises need to trust AI at scale.

Where the Two Approaches Fit

About the author: Krishna Subramanian is the co-founder, president and COO of Komprise. In her career, Subramanian has built three successful venture-backed IT businesses and was named a “2021 Top 100 Women of Influence” by Silicon Valley Business Journal.

For example, a customer support AI can instantly surface a customer’s history of calls and messages and resolve issues without escalation. A supply chain AI plugged into IoT sensor data can predict equipment failures before they happen.

The Hybrid Future: Middleware and Unstructured Data Management

Official integrations, by contrast, provide structure. An API, webhook, or MCP server represents an intentional connection between the data provider and the AI system. These integrations are designed for stability, scalability, and governance, ensuring that data flows in a reliable and controlled way.

AI is only as good as the data it can access. While external data helps models stay current, internal data is often the most valuable because it reflects the unique knowledge, processes, and history of an organization. Without these sources, AI systems are stuck working from static training sets, which quickly become outdated. With them, AI can reason in real time, deliver context-specific insights, and produce results that are directly relevant to the enterprise.

The Bottom Line

Yet today, there’s a growing divide in how AI agents get that data: should they scrape or integrate? On the one hand, scraping-first approaches rely on whatever is publicly accessible, through retrieval-augmented generation (RAG) pipelines, browser automation, proxy networks, Web MCP, or other techniques that bypass CAPTCHAs, reverse-engineer interfaces, and circumvent rate limits. (Below is a helpful table defining these terms.) The philosophy here is simple: collect anything you can and ask for forgiveness later.

But the drawbacks are substantial. First, scraped data comes with no guarantees of quality, stability, or coverage. Second, scraping methods are brittle: sites change layouts, CAPTCHAs evolve, and access can be blocked without warning. Most importantly, scraping creates legal and compliance risks. Enterprises are hesitant to rely on AI outputs derived from scraped content, wary of inheriting liability from derivative works.
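The brittleness point can be shown with a small Python sketch that uses only the standard library's `html.parser`. The page snippets, the `price` class name, and the `scrape_price` helper are made up for illustration. The scraper works against one layout and silently returns nothing once the markup changes.

```python
from html.parser import HTMLParser
from typing import Optional


class PriceScraper(HTMLParser):
    """Grabs the text of the first <span class="price">, a hypothetical page layout."""

    def __init__(self) -> None:
        super().__init__()
        self._in_price = False
        self.price: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the opening tag.
        if tag == "span" and ("class", "price") in attrs:
            self._in_price = True

    def handle_data(self, data):
        if self._in_price and self.price is None:
            self.price = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._in_price = False


def scrape_price(html: str) -> Optional[str]:
    parser = PriceScraper()
    parser.feed(html)
    parser.close()
    return parser.price


# Same information, two layouts: the redesign drops the span and class name.
old_layout = '<div><span class="price">$19.99</span></div>'
new_layout = '<div><p data-testid="amount">$19.99</p></div>'
```

Here `scrape_price(old_layout)` finds the value, while `scrape_price(new_layout)` returns `None` with no error raised. Unless the pipeline checks for that explicitly, the failure goes unnoticed downstream.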

Data management platforms that catalog, classify, and curate unstructured information will act as vital intermediaries. By filtering noise and shaping raw content into structured feeds, these solutions provide AI agents with just the right data for the right use case. This can reduce AI processing costs by as much as 70% by eliminating poor-quality data, and it keeps out sensitive data that can lead to compliance violations and harmful leaks.
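A curation step of this kind might look something like the following Python sketch. It is a simplified assumption of how such filtering could work, not a description of any particular platform. The `FileRecord` fields, the `curate` function, and the `pii` tag are all hypothetical. It drops records that are outdated, tagged as sensitive, or duplicates of content already kept.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class FileRecord:
    """Hypothetical catalog entry for one unstructured file."""
    path: str
    modified: date
    content_hash: str                     # identical content yields identical hash
    tags: frozenset[str] = frozenset()    # classification labels, e.g. "pii"


def curate(records: list[FileRecord], today: date, max_age_days: int,
           blocked_tags: frozenset[str]) -> list[FileRecord]:
    """Return only records that are recent, non-sensitive, and not duplicates."""
    cutoff = today - timedelta(days=max_age_days)
    seen_hashes: set[str] = set()
    kept: list[FileRecord] = []
    for rec in records:
        if rec.modified < cutoff:
            continue  # outdated
        if rec.tags & blocked_tags:
            continue  # carries a sensitive classification
        if rec.content_hash in seen_hashes:
            continue  # duplicate of something already kept
        seen_hashes.add(rec.content_hash)
        kept.append(rec)
    return kept
```

Only the records that survive `curate` would be handed to the AI agent, so the irrelevant, outdated, and duplicative share of the data never incurs processing cost.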

The future likely belongs to a hybrid model: structured, governed integrations combined with a processing layer that organizes and interprets unstructured data. Enterprises that embrace this model will not only unlock more value from their AI initiatives but also insulate themselves from the compliance and legal risks that threaten less disciplined approaches.