Luckily, this one isn’t so heady: it’s just so obvious that we don’t see it anymore. We’re talking about alert fatigue, and why more companies don’t start there instead of making hefty investments downstream to fix what should have been a simple upstream problem.

The best AI SOC platforms are designed specifically to handle these upstream problems, putting the whole security picture together to give SOCs neatly packaged, ready-to-action alerts. AI capabilities can:

By doing a few simple things – tuning alert detections properly, applying AI to enrich alerts with environmental context, and prioritizing fixes by business impact – teams can cut down on excessive alerts and the myriad problems that come with them.

Sometimes we get so stuck in the weeds that we forget to apply normal common sense, especially to heady cybersecurity principles.

Why Does Alert Fatigue Happen, Anyway?

Downstream triage also gets overwhelmed, so cutting off the upstream causes could really help. That’s the simple explanation. The less you give yourself to triage, the better. Other problems happen when you don’t squash mess at the source: you’re not only “dealing with it later,” but now chasing it (security incidents that got away) across complex hybrid and multi-cloud architectures, through security gaps, over multiple tools, and a step behind instead of a step ahead.

By Katrina Thompson

We’ll start with more of the obvious. Anyone in (or around) a SOC knows that they get way more alerts than they can possibly handle. On a daily basis. Forever. Thanks to AI, those numbers are now much worse.

- Built without environmental context, they miss key clues that would single out false positives.

- Built on bad thresholds (static ones), so they don’t adjust to spikes in traffic.

- Overlapping, creating redundancies: for example, three different triggers will fire on the same incident, creating three times as many alerts (oops).
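Two of the problems above, static thresholds and overlapping triggers, can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: the field names (`host`, `technique`, `ts`), the three-standard-deviation rule, and the five-minute window are all assumptions chosen for the example.

```python
from statistics import mean, stdev

def adaptive_threshold(history, k=3.0):
    """Threshold that follows the recent baseline instead of a fixed number.

    `history` is a list of recent counts (for example, logins per minute).
    The mean-plus-k-standard-deviations rule is an assumption for this sketch.
    """
    return mean(history) + k * stdev(history)

def dedupe(alerts, window_seconds=300):
    """Collapse alerts that describe the same activity on the same host.

    Alerts are dicts with hypothetical keys: host, technique, ts (seconds).
    Alerts sharing (host, technique) are merged while they keep arriving
    within `window_seconds` of the previous one.
    """
    kept = []
    last_seen = {}
    for alert in sorted(alerts, key=lambda a: a["ts"]):
        key = (alert["host"], alert["technique"])
        prev = last_seen.get(key)
        if prev is None or alert["ts"] - prev > window_seconds:
            kept.append(alert)  # first sighting, or the quiet gap has passed
        last_seen[key] = alert["ts"]
    return kept

# Three rules firing on one incident, plus a later, separate occurrence.
alerts = [
    {"rule": "A", "host": "web-1", "technique": "brute_force", "ts": 0},
    {"rule": "B", "host": "web-1", "technique": "brute_force", "ts": 10},
    {"rule": "C", "host": "web-1", "technique": "brute_force", "ts": 20},
    {"rule": "A", "host": "web-1", "technique": "brute_force", "ts": 1000},
]
unique_alerts = dedupe(alerts)
```

In this sketch the three overlapping triggers collapse into one alert, while the later occurrence still surfaces on its own.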

Root-cause noise is the primary driver of analyst overload, and downstream triage cannot solve what upstream signal design breaks. But fixing those problems requires knowing what’s going wrong, and what right looks like.

Why Downstream Triage Doesn’t Work

But shouldn’t there be detection logic that’s built to catch some of the mess? Yes, only it’s not being designed properly. Poorly designed or noisy detection logic floods analysts with low-fidelity signals that require downstream triage rather than upstream fixes.

An ardent believer in personal data privacy and the technology behind it, Katrina Thompson is a freelance writer leaning into encryption, data privacy legislation, and the intersection of information technology and human rights. She has written for Bora, Venafi, Tripwire, and many other sites.

That’s because solving alert fatigue is about more than just making your SOC’s life easier. It’s about reducing the chances that you’ll miss the “big one” because you’re stuck in the weeds, or burn out your SOC, or run out of security resources, and end up getting breached. It’s making your business’ life easier – and that’s just common sense.

- Downstream triage treats symptoms, not causes. Only upstream improvements fix the pipeline to the point where it’s actually usable, and SOCs can depend on it and even act on it. We’re talking things like:

- Detection tuning

- Signal hygiene

- Better correlation

- Excessive alert noise gets in the way of downstream triage. Too many alerts lead to reduced vigilance, missed threats, and weakened operational performance, directly impacting business risk.

- Dealing with alert fallout downstream lets threats get ahead of you. This means increased dwell time (as you try to get to them all), delayed detection, resource strain, burnout, turnover, and the death of SOC credibility as teams are increasingly seen to do less as more security threats come in.

Fighting an upstream battle isn’t easy, but it’s better than chasing one downhill. The number of alerts typical SOC solutions ingest is too high for even automated processes, much less manual ones. That’s why vendors are turning to AI to clean things up.

Pulling in AI to Reduce Noise at the Source

It gives attackers the advantage. It gives them a head start and more chances to move laterally and hide. It gives them the chance you’ll forget, or lose track of them, or not be fast enough. It opens up a whole Pandora’s Box of security hurt that could have been avoided. In more technical terms:

You’ve heard the analogy, also a poem: why rely on an ambulance down in the valley when you could put a fence up on the hill? The ambulance seems to be the logic a lot of teams are operating on, and there’s just a better way.

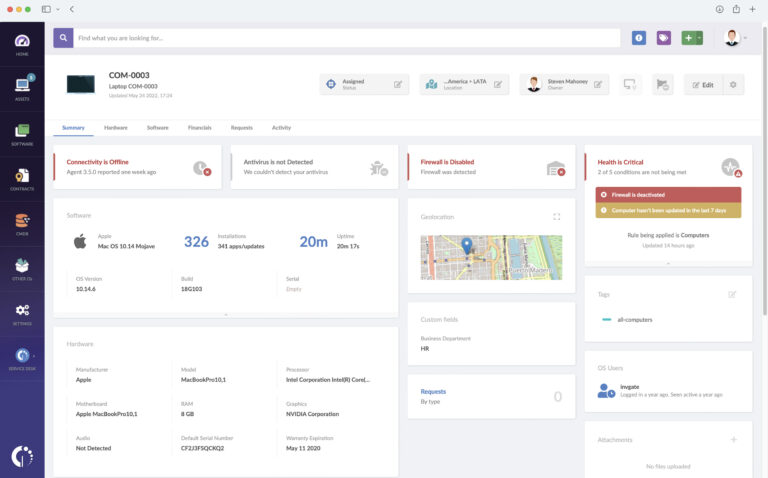

- Enrich alerts with context, collecting and aggregating telemetry from across complex cloud, hybrid, and multi-cloud environments and multiple tools (SIEM, EDR, identity management).

- Apply context-aware filtering: This means that a suspicious command executed by an administrator within a prescheduled change window will not trigger an alert in the same way that the same command executed in other contexts would.

- Filter repetitive false positives. Continuous learning, dynamic reasoning, and contextual enrichment allow AI SOC Analyst platforms to reduce false positives by up to 95%.

- Score events based on business impact and risk. AI is key to building a risk-based alert pipeline that not only delivers fewer alerts (and higher quality ones) but prioritizes them based on their overall importance to the business.
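The change-window example above is easy to picture in code. What follows is a rough sketch under stated assumptions, not how any particular platform does it: the `ADMINS` set, the `CHANGE_WINDOWS` list, the event fields, and the `log_only` / `alert` labels are all hypothetical names made up for illustration.

```python
from datetime import datetime

# Hypothetical context a platform might pull from identity and change-management tools.
ADMINS = {"alice"}
CHANGE_WINDOWS = [
    (datetime(2024, 1, 10, 22, 0), datetime(2024, 1, 11, 2, 0)),  # approved maintenance
]

def in_change_window(ts, windows=CHANGE_WINDOWS):
    """True if the timestamp falls inside any approved change window."""
    return any(start <= ts <= end for start, end in windows)

def disposition(event, admins=ADMINS, windows=CHANGE_WINDOWS):
    """Decide how to treat a suspicious command given who ran it and when.

    `event` is a dict with hypothetical keys: user, ts (datetime), command.
    """
    if event["user"] in admins and in_change_window(event["ts"], windows):
        return "log_only"  # expected admin activity: record it, don't page anyone
    return "alert"         # same command, different context: raise it

during_window = {"user": "alice", "ts": datetime(2024, 1, 10, 23, 0), "command": "net user /add"}
outside_window = {"user": "alice", "ts": datetime(2024, 1, 10, 12, 0), "command": "net user /add"}
non_admin = {"user": "bob", "ts": datetime(2024, 1, 10, 23, 0), "command": "net user /add"}
```

The same command gets three different treatments depending on context, which is the whole point: the detection doesn't change, the environment around it does.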

Sometimes, it’s the fault of an MSSP that has largely lenient logic, so it ensures “nothing gets missed.” Well, nothing does, but nothing much gets caught either. Too much is just as bad as not enough. Other times detections are:

Reducing Alerts: It’s Good for Business

The list goes on. All of these minor errors lead to one big problem down the road: overwhelming alert fatigue, with 62% of alerts being ignored on purpose.

So, what can be done? Reduce noise at the source. And not surprisingly, AI can help with that.

AI reasoning and environmental context fold factors like exploitability, severity, and asset sensitivity and impact into the overall risk score, helping SOCs act based on business priorities, not severity scores alone.
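To make the idea concrete, here is one way such a score could be put together. It is only a sketch: the 0-to-1 scales, the weights, and the field names are assumptions invented for this example, and a real platform would derive them from its own data.

```python
def risk_score(exploitability, severity, asset_sensitivity, weights=(0.3, 0.3, 0.4)):
    """Blend three factors (each assumed to be on a 0-1 scale) into one score.

    The weights are hypothetical; here asset sensitivity counts slightly more
    so that business impact can outrank raw severity.
    """
    w_exp, w_sev, w_asset = weights
    return w_exp * exploitability + w_sev * severity + w_asset * asset_sensitivity

def prioritize(alerts):
    """Return alerts ordered from highest to lowest risk score."""
    return sorted(
        alerts,
        key=lambda a: risk_score(a["exploitability"], a["severity"], a["asset_sensitivity"]),
        reverse=True,
    )

alerts = [
    # High severity, but on a low-value test box that is hard to exploit.
    {"name": "test-box", "exploitability": 0.2, "severity": 0.9, "asset_sensitivity": 0.1},
    # Medium severity, but easily exploitable on a business-critical system.
    {"name": "payments-db", "exploitability": 0.8, "severity": 0.5, "asset_sensitivity": 1.0},
]
ranked = prioritize(alerts)
```

With these made-up numbers, the medium-severity alert on the payments database lands ahead of the high-severity alert on the test box, which is what ranking by business priority rather than severity alone looks like in practice.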

As AI SOC Platform company Prophet Security states, “Reducing alert fatigue is a cross discipline effort. You need clean detections, reliable data, a crisp workflow, strong feedback loops, and metrics that guide decisions.”