Yet the effectiveness of models can vary widely depending on how queries are phrased, which is why prompt engineering matters as a way of optimizing model operations. More specifically, prompt engineering helps ensure:

Next, we generate an additional set of model output scores using AI. We do this by asking models to assess the quality of responses from other models.
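As a rough illustration of this step, the sketch below shows one way a "judge" model could be asked to score another model's response. It is a minimal example under stated assumptions, not a description of any particular product: the 1-to-5 scale, the template wording, and the names `build_judge_prompt`, `parse_score`, `score_with_judge` and `fake_judge` are all hypothetical, and the judge is passed in as a plain function so that no specific model API is assumed.

```python
import re
from typing import Callable, Optional

# Hypothetical grading template; the wording and the 1-5 scale are assumptions.
JUDGE_TEMPLATE = (
    "You are grading another model's answer.\n"
    "Question:\n{prompt}\n\n"
    "Answer:\n{response}\n\n"
    "Rate the answer from 1 (poor) to 5 (excellent) for accuracy, "
    "completeness and consistency. Reply with a single integer."
)


def build_judge_prompt(prompt: str, response: str) -> str:
    """Wrap the original prompt and the response in the grading template."""
    return JUDGE_TEMPLATE.format(prompt=prompt, response=response)


def parse_score(reply: str, low: int = 1, high: int = 5) -> Optional[int]:
    """Return the first integer in the judge's reply, or None if unusable."""
    match = re.search(r"\d+", reply)
    if match is None:
        return None
    score = int(match.group())
    return score if low <= score <= high else None


def score_with_judge(
    prompt: str, response: str, judge: Callable[[str], str]
) -> Optional[int]:
    """Ask the judge (any function from text to text) to score a response."""
    return parse_score(judge(build_judge_prompt(prompt, response)))


def fake_judge(judge_prompt: str) -> str:
    """Stand-in for a real model call so the example runs offline."""
    return "Score: 4"


if __name__ == "__main__":
    print(score_with_judge("What is 2 + 2?", "4", fake_judge))
```

In practice, `fake_judge` would be replaced by a call to whichever model is acting as the evaluator. Replies that cannot be parsed into a valid score come back as `None` so they can be reviewed rather than silently counted.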

After recording both human assessments and AI assessments of model output, we compare the scores. If they’re similar, we know that AI models are effective in generating quality scores for a certain type of prompt. (If they’re not similar, we experiment with different models until we find ones that can score outputs with the same consistency as humans.)
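One simple way to make "similar" concrete is to measure how far the AI scores fall from the human scores on the same outputs. The sketch below is only an illustration: the function names, the tolerance of one point, and the acceptance thresholds are hypothetical choices, and other agreement measures could be substituted.

```python
from statistics import mean


def agreement_report(human_scores, ai_scores, tolerance=1):
    """Compare paired human and AI scores for the same model outputs."""
    if len(human_scores) != len(ai_scores) or not human_scores:
        raise ValueError("need two non-empty score lists of equal length")
    diffs = [abs(h - a) for h, a in zip(human_scores, ai_scores)]
    return {
        # Average distance between the human score and the AI score.
        "mean_abs_diff": mean(diffs),
        # Share of outputs where the two scores differ by at most `tolerance`.
        "within_tolerance": sum(d <= tolerance for d in diffs) / len(diffs),
    }


def judge_is_consistent(report, max_mean_diff=0.5, min_within=0.9):
    """Hypothetical acceptance rule; the thresholds are assumptions."""
    return (
        report["mean_abs_diff"] <= max_mean_diff
        and report["within_tolerance"] >= min_within
    )


if __name__ == "__main__":
    human = [5, 4, 2, 3, 5]  # made-up example ratings
    ai = [5, 4, 3, 3, 4]
    report = agreement_report(human, ai)
    print(report, judge_is_consistent(report))
```

If the check fails for a given type of prompt, the same comparison can be rerun with a different evaluator model until one passes.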

By Eamonn O’Neill

The importance of prompt engineering

By extension, we can quickly identify prompts that result in low-quality output, without having to wait for humans to review the responses. In addition, by issuing the same prompt to multiple models and assessing the output automatically, we can easily determine whether a lower-cost model can achieve the same level of quality as a more expensive one for a given type of prompt.
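The model comparison described here can be sketched as a small selection rule: among the models whose average quality score is close enough to the best one, pick the cheapest. Everything in the example is hypothetical, including the `ModelResult` type, the model names, the costs, and the allowed quality drop of 0.25 points.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class ModelResult:
    name: str
    cost_per_call: float  # hypothetical cost in arbitrary units
    scores: List[float]   # automated quality scores for one type of prompt


def cheapest_adequate_model(results, max_quality_drop=0.25):
    """Return the cheapest model whose mean score is near the best mean."""
    best_quality = max(mean(r.scores) for r in results)
    adequate = [
        r for r in results
        if mean(r.scores) >= best_quality - max_quality_drop
    ]
    return min(adequate, key=lambda r: r.cost_per_call)


if __name__ == "__main__":
    # Made-up numbers for illustration only.
    results = [
        ModelResult("large", 0.03, [4.5, 4.7, 4.6]),
        ModelResult("medium", 0.01, [4.4, 4.5, 4.6]),
        ModelResult("small", 0.002, [3.1, 3.4, 3.0]),
    ]
    print(cheapest_adequate_model(results).name)
```

With these made-up numbers the rule selects the medium model: it scores within the allowed margin of the large model at a lower cost, while the small model falls too far behind on quality.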

Part of the appeal of AI models is that, unlike traditional applications, they don’t require specific types of input, such as commands that are spelled out in the form of computer code. Instead, models can respond to open-ended natural language queries.

- Accuracy: Carefully crafted prompts can reduce hallucination risks.

- Speed: More concise prompts can lead to faster results, since they include less data for a model to interpret.

- Cost management: The length and complexity of a prompt affect how much it costs for a model to respond to it.

- Effective model selection: Understanding how different models respond to different prompts can help organizations choose the best model for a given type of prompt – an important consideration given the large number of models that are now available, each with varying levels of performance and cost.

Quality scoring as the basis for automated prompt engineering

Again, one way to engineer prompts is simply to work through different iterations of the same request until a model delivers the desired results. But this trial-and-error approach does not scale well.

A better approach is to treat prompt engineering as a systematic endeavor by automatically scoring AI outputs, then using the insights to craft better prompts over time. This is the strategy my company has adopted as we seek to integrate AI more deeply into our operations.

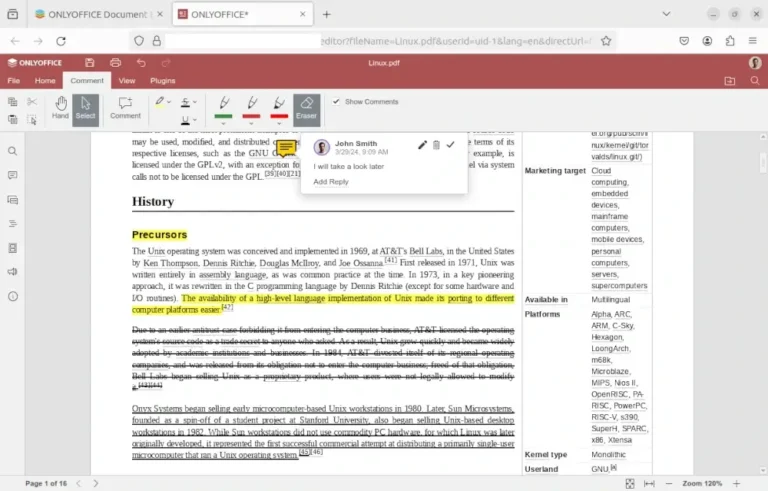

- Collect human feedback on model outputs

Based on the insights gleaned from the process I just described, we’re able to implement a highly structured and scalable prompt engineering strategy. Instead of simply educating employees on prompt-engineering best practices and hoping that they follow them, we can automatically determine when models receive low-quality prompts, or when we could save money by shifting a prompt to a lower-cost model without compromising on response quality.

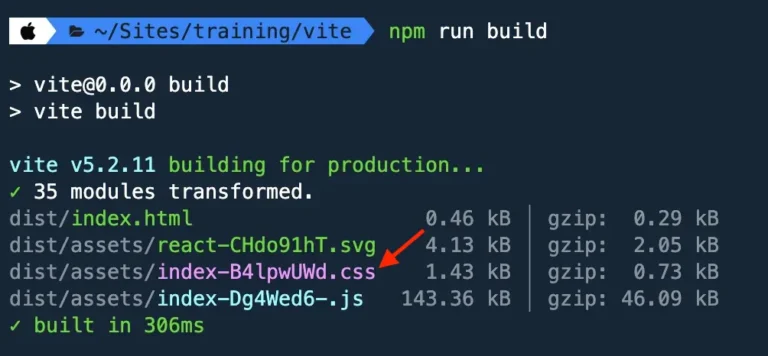

- Automatically evaluate outputs using AI

Once we reach this point, we can put AI to work optimizing prompts. When AI models can rate model output as consistently as humans do, we can process large volumes of prompts using AI, which makes it possible to scale and automate quality scoring.

- Compare human vs. AI output scores

First, we ask employees to rate the effectiveness of AI responses to common queries. They score the output based on how accurate, complete and consistent it is.
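To make ratings like these comparable across reviewers, it helps to record them in a fixed structure. The sketch below is one possible shape, not a description of an actual system: the `HumanRating` type, the 1-to-5 scale, and the equal weighting of the three criteria are all assumptions.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class HumanRating:
    rater: str
    accuracy: int      # each criterion on an assumed 1-5 scale
    completeness: int
    consistency: int

    def __post_init__(self):
        # Reject values outside the assumed scale.
        for name in ("accuracy", "completeness", "consistency"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    def overall(self):
        """Equal-weight average of the three criteria (an assumption)."""
        return mean((self.accuracy, self.completeness, self.consistency))


def average_overall(ratings):
    """Average the overall score across several raters for one output."""
    return mean(r.overall() for r in ratings)


if __name__ == "__main__":
    ratings = [
        HumanRating("reviewer_a", 5, 4, 3),  # made-up example ratings
        HumanRating("reviewer_b", 3, 3, 3),
    ]
    print(average_overall(ratings))
```

Keeping the three criteria separate, instead of storing only a single number, makes it possible to see later whether a low score came from inaccuracy, missing content, or inconsistency.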

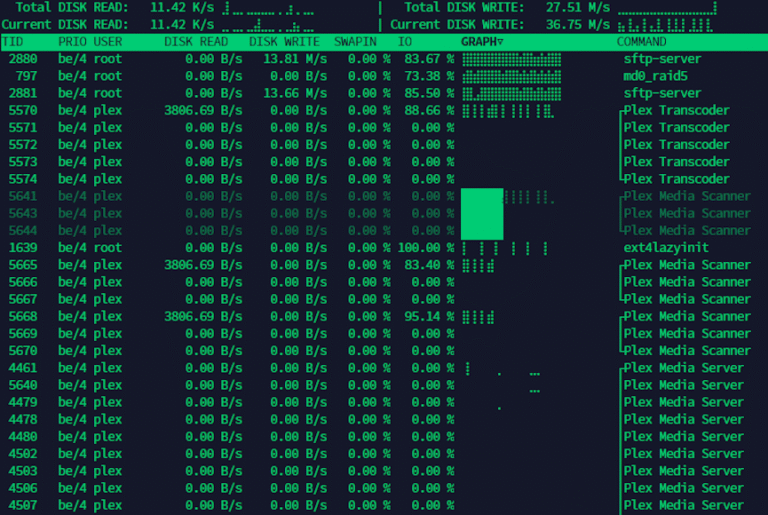

- Assess prompt quality at scale

We’re using this quality assessment system as part of an AI-driven solution for automatically modernizing legacy system code. But it could be applied to virtually any environment where organizations need to optimize prompts at scale. Many will need to, as they rely more heavily on AI and navigate the increasingly complex ecosystem of AI models now available for enterprise use.

Prompt engineering – the practice of carefully managing input to AI models – is a hot topic today. Organizations are realizing that optimizing prompts is a key step in getting the fastest, most accurate results from AI.

A scalable, structured approach to automatic prompt engineering

Instead, businesses seeking to make the most of prompt engineering should systematically rate the quality of responses to varying prompts. My organization does this using the following steps.

Yet, too often, prompt engineering is essentially an ad hoc activity. It centers on manually iterating through different prompts until users land on the results they’re looking for – a process that might ultimately give users what they seek, but not in the most efficient or cost-effective manner.

Read on for the details of our process and what we’ve learned about building an automated, scalable prompt engineering solution.